Summary

Hey! I'm Mohammad, a full-stack software engineer and new grad from the University of Maryland, currently based in New York City, NY. I got my start building web apps when I was 7, shipped my first mobile app to Google Play at 14, and graduated with a B.S. in Electrical and Computer Engineering at 18. For most of college I was working two or three engineering roles, mentoring students, leading projects, and overloading on courses. I said yes to anything that seemed interesting and shipped production software at research labs, hospitals, defense contractors, and startups.

I chose ECE over CS because I'd been building software for years and wanted to understand how computing works from the hardware up. So alongside full-stack development with React, Flutter, FastAPI, and AWS, I also came up through circuits, embedded systems, and signal processing. I've written microcontroller firmware, built real-time data pipelines, and developed research systems that record from and stimulate living brain tissue in real time. That gives me an engineering instinct that starts well below the application layer, and it shows in how I approach performance, system design, and real-world constraints.

This portfolio goes into more depth than a resume can. For a quick read with condensed bullet points, see my resume instead.

What I Work With

These are the tools I've built real things with and know well enough to be productive on day one. I tinker with plenty more, but these are the ones I keep coming back to.

Experience

Joined as the first in-person engineer at an LLM observability startup: the infrastructure layer that lets companies monitor their AI systems in production, catch when response quality degrades, and audit every interaction for compliance. Maintained the core evaluation platform for HIPAA-regulated healthcare companies using LLM-powered tools for patient interactions, building monitoring pipelines where every prompt and response required audit trails, BAA compliance, and quality guarantees, since degraded AI outputs create liability, not just poor user experience. Simultaneously shipped new product directions during the pre-seed to seed transition, delivering three complete pivots in six months with one-to-two-week demo cycles.

The first pivot was an API testing tool where users plug in their AI endpoint and we automatically simulate hundreds of user personas (elderly patients skeptical of technology, frustrated users with urgent symptoms, edge cases impossible to QA manually), with AI evaluators analyzing responses and generating reports on failures and hallucinations.

The second pivot was browser-based testing where users describe test sequences in plain English like "try to check out with an expired card" and an AI agent crawls the site, builds a sitemap, navigates to the target page, and executes interactions on the live UI. Deployed on AWS with CloudFormation provisioning infrastructure; Fargate, EKS, and ECS running containerized browser sessions in parallel; and BrowserBase handling the automation layer. This was technically challenging because real websites have dynamic content, auth flows, and popups that crash autonomous agents constantly.

The third pivot was an adaptive learning space where users upload materials, describe what they want to learn, and the system generates complete courses with AI-synthesized podcasts, quizzes, and voice-based roleplay where course builders define personas like "act as an interested customer" for sales enablement training. Built a Chrome extension similar to Scribe that records user actions across websites with AI-analyzed screenshots, letting other users replay guided walkthroughs in their browser. Architected the backend using LangGraph state machines with 15+ nodes orchestrating quiz generation, podcast synthesis via ElevenLabs and Cartesia, and roleplay conversations, with pgvector for semantic search over uploaded documents. Implemented real-time streaming via tRPC with PostgreSQL session persistence. Full-stack TypeScript: React 19 frontend, Hono API layer, Prisma for database operations, BullMQ with Redis for async document parsing and audio generation, multi-tenant auth through WorkOS and Clerk, R2 and S3 for object storage, and Supabase as an additional data layer.

Led a team of 12 engineers building TrachHub, a real-time monitoring system for pediatric tracheostomy patients where airway blockages can be fatal within minutes if undetected. Designed the distributed system architecture: a wearable CO₂ sensor streams data via Bluetooth to a Raspberry Pi edge hub running real-time obstruction detection locally, syncing to cloud infrastructure and triggering alerts through a Flutter mobile app for parents and a React clinical dashboard enabling nurses to monitor multiple patients simultaneously. Oversaw development of four production applications processing 5.2M sensor samples daily: a React touchscreen GUI on the Raspberry Pi hub handling Bluetooth device pairing, adaptive calibration, and real-time CO₂ waveform visualization; a Flutter mobile app with emergency push notifications over cellular failover; a React multi-patient monitoring dashboard for clinical staff; and a React portal for patient registration and device provisioning. Implemented fault-tolerant edge-cloud architecture using Python, FastAPI, and TimescaleDB for high-frequency medical time-series data, with MQTT and WebSocket protocols enabling seamless synchronization and WiFi/cellular failover ensuring uninterrupted monitoring during network degradation. Developed embedded C++ firmware on ESP32-S3 microcontrollers implementing DSP algorithms including adaptive thresholding, RMS analysis, and signal filtering with hysteresis, achieving sub-40ms obstruction detection latency at 60Hz sampling rates. Optimized BLE transmission protocols to achieve 2-week battery life and 100m range while maintaining HIPAA compliance through SSL/TLS encryption, secure boot, and disk encryption. Validated system performance using phantom simulators generating physiological CO₂ waveforms, meeting FDA regulatory standards at $98 unit cost for scalable clinical deployment. 
Deployed UPS-backed edge infrastructure with Cloudflare tunneling for secure remote access, enabling 24/7 monitoring across hospital and home environments.
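To make the detection idea above more concrete, here is a minimal Python sketch of windowed RMS analysis followed by a hysteresis threshold. It is illustrative only: the production firmware is embedded C++, and the window length, the `low`/`high` thresholds, the function names, and the assumption that an obstruction shows up as a collapse in CO₂ waveform amplitude are all hypothetical choices made for this example.

```python
import math
from collections import deque

SAMPLE_RATE_HZ = 60  # sampling rate stated in the write-up


def rolling_rms(samples, window):
    """Yield the RMS of the most recent `window` samples after each new sample."""
    buf = deque(maxlen=window)
    sum_sq = 0.0
    for x in samples:
        if len(buf) == window:
            sum_sq -= buf[0] ** 2  # the oldest sample is about to be evicted
        buf.append(x)
        sum_sq += x ** 2
        yield math.sqrt(max(sum_sq, 0.0) / len(buf))


def detect_obstruction(rms_values, low=0.2, high=0.5):
    """Hysteresis detector (thresholds are illustrative, not device values).

    The flag is raised when RMS drops below `low` and is cleared only after
    RMS recovers above `high`, so noise near one threshold cannot make the
    alarm chatter on and off.
    """
    obstructed = False
    flags = []
    for r in rms_values:
        if not obstructed and r < low:
            obstructed = True
        elif obstructed and r > high:
            obstructed = False
        flags.append(obstructed)
    return flags


# One sensor streaming continuously at 60 Hz produces this many samples a day.
samples_per_day = SAMPLE_RATE_HZ * 24 * 60 * 60  # 5,184,000
```

The last line is a quick sanity check on scale: one continuously streaming 60 Hz sensor produces about 5.18M samples per day, which is consistent with the 5.2M daily figure if that figure refers to a single continuous stream. At 60 Hz each sample period is roughly 16.7 ms, so a sub-40ms detection budget leaves room for only about two sample periods.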

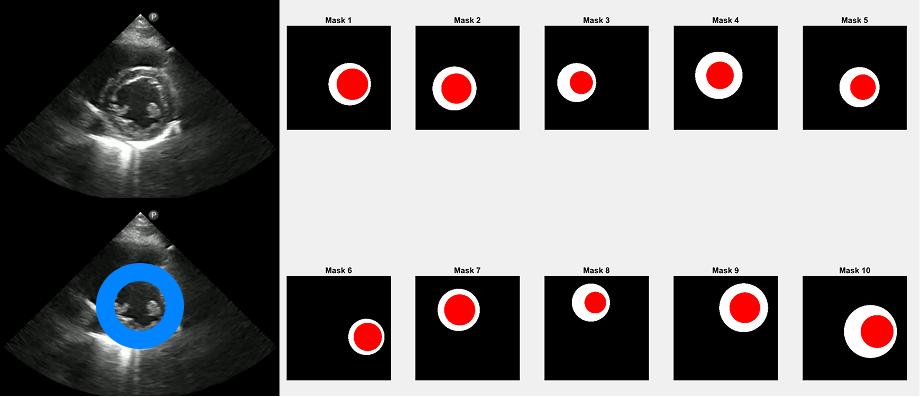

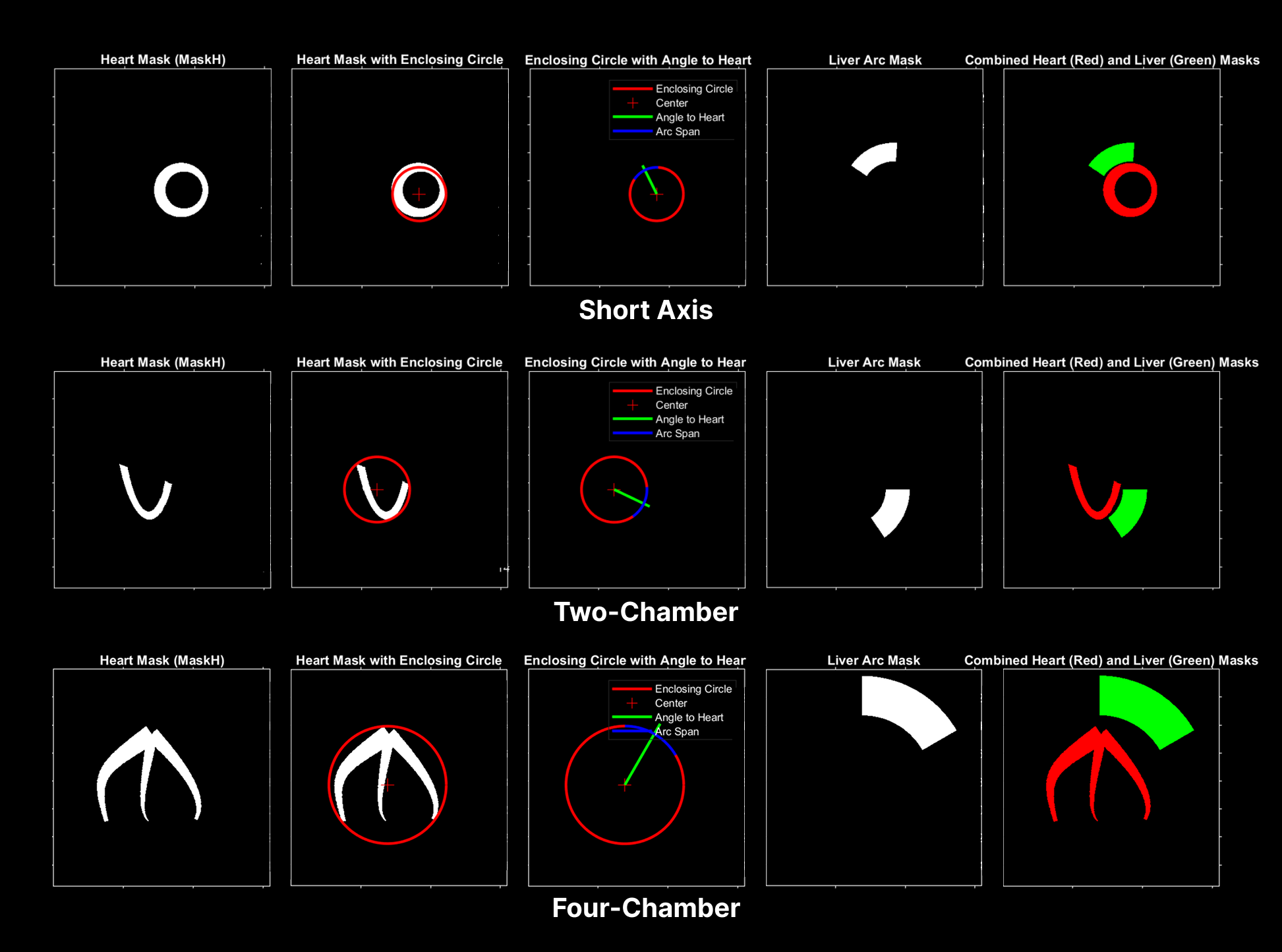

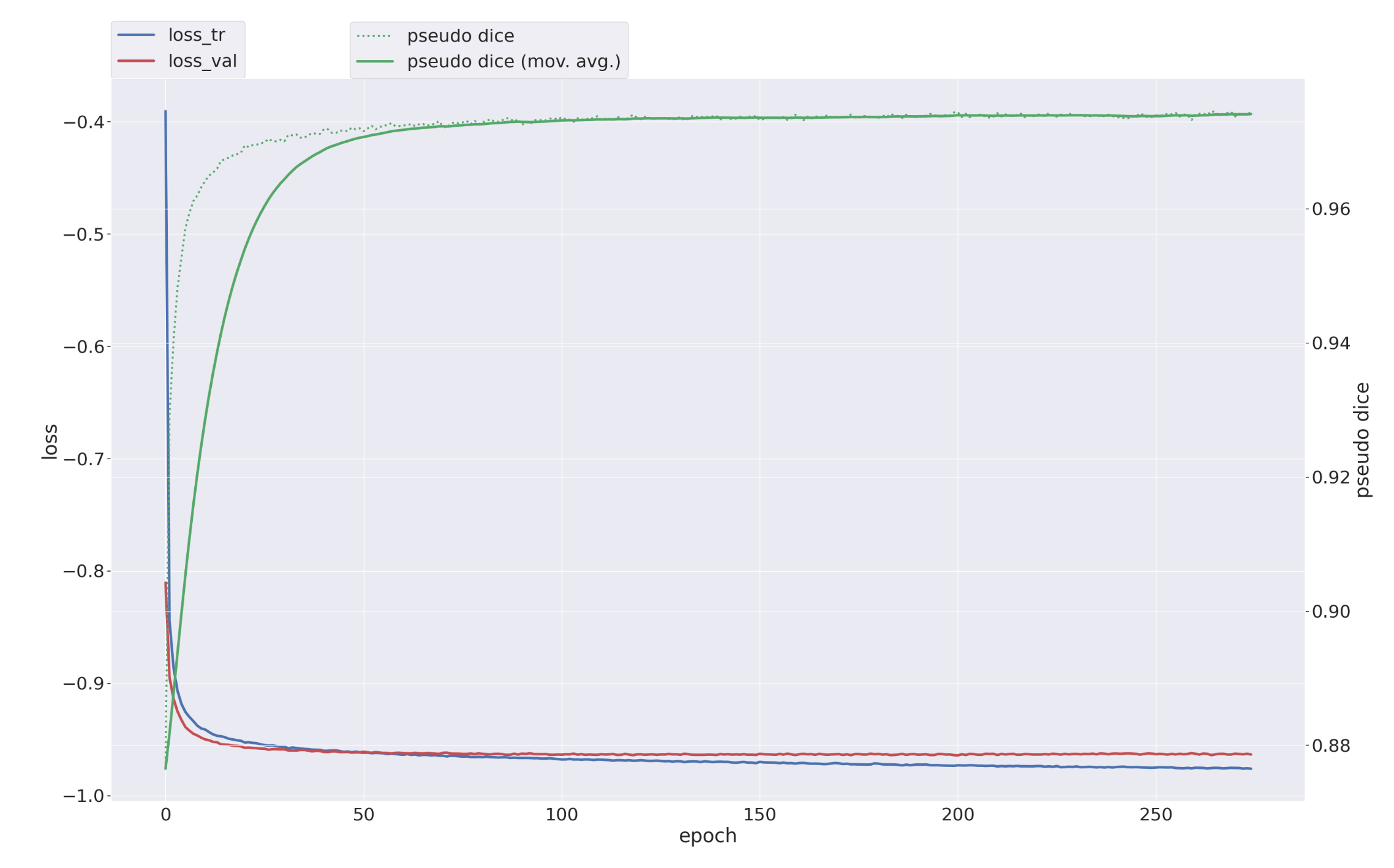

Developed a deep learning pipeline for AI-powered cardiac MRI analysis as part of an undergraduate research program within the Biomedical and Metabolic Imaging Branch at NIDDK, focused on detecting early heart dysfunction through automated segmentation and strain mapping of heart tissue. The core problem this research addressed is data scarcity: training neural networks to analyze medical images requires thousands of labeled examples, but real patient cardiac MRI scans are expensive to acquire, require expert annotation, and raise privacy concerns, creating a fundamental bottleneck for medical AI development. To solve this, I built a Python and MATLAB simulation platform that generates synthetic training data indistinguishable from real scans, producing 708 datasets across three cardiac views (two-chamber, four-chamber, and short-axis) by implementing computational phantoms using Bézier curve mathematics to model anatomically realistic heart shapes with proper tissue thickness variation, soft-tissue biomechanics, and motion artifacts that mimic actual tagged MRI acquisitions. Engineered an end-to-end data pipeline processing 885+ NIfTI medical imaging files (the standard file format for medical imaging, similar to how JPEG works for photos) using SimpleITK for 3D visualization and Python multiprocessing parallelized across 64 CPU cores, handling anatomical mask generation, liver positioning algorithms, and conversion to the structured directory format required by nnU-Net, a state-of-the-art neural network architecture for medical image segmentation. Trained the model on an NVIDIA Quadro RTX 6000 GPU, achieving 95.1% Dice coefficient (the standard accuracy metric measuring overlap between predicted and actual heart boundaries) with 2.4% volume error and sub-50ms inference time, representing a 71% improvement over prior physics-only baseline methods. 
Architected modular libraries separating deformation generation, mask creation, and signal simulation, implementing validation across 50 randomized test samples to confirm the synthetic data approach enabled robust model generalization across cardiac views.
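For readers unfamiliar with the metric, the Dice coefficient between a predicted mask A and a ground-truth mask B is 2|A∩B| / (|A| + |B|). The sketch below computes it with NumPy on binary masks. The function name and the convention of returning 1.0 for two empty masks are assumptions made for this example, not details of the nnU-Net evaluation code.

```python
import numpy as np


def dice_coefficient(pred, truth):
    """Dice overlap between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Both masks are empty; treating that as perfect agreement is a convention.
        return 1.0
    intersection = np.logical_and(pred, truth).sum()
    return float(2.0 * intersection / denom)


# Tiny worked example: 3 predicted pixels, 3 true pixels, 2 shared.
pred_mask = [[1, 1, 0],
             [0, 1, 0]]
true_mask = [[1, 0, 0],
             [0, 1, 1]]
score = dice_coefficient(pred_mask, true_mask)  # 2 * 2 / (3 + 3) = 0.666...
```

A score of 1.0 means the predicted and reference boundaries coincide exactly, and 0.0 means they do not overlap at all.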

Built a cross-platform mobile application using Flutter for first responders operating in dangerous or remote field conditions where reliable connectivity cannot be guaranteed. The core product problem was that emergency personnel needed to log their location before entering hazardous situations, send SOS alerts with rich multimedia context if something went wrong, and have all of this work even when cell service drops entirely. Implemented an offline-first architecture where the application maintains full functionality without network access, caching GPS coordinates, geo-tagged images, videos, and incident notes to local storage, then automatically synchronizing with backend APIs when connectivity resumes. Engineered the real-time GPS tracking system with asynchronous programming patterns to continuously log location data during active trips without blocking the UI or draining battery through inefficient polling. Integrated OpenStreetMap and GIS technologies for incident mapping and visualization, allowing responders and dispatchers to see precise locations overlaid on detailed terrain maps rather than raw coordinates. Designed a hybrid storage architecture combining SQL databases for structured relational data with NoSQL for flexible document storage, implementing ACID-compliant write operations to ensure data integrity even during unexpected app termination or device failure mid-write. Built secure communication infrastructure using RESTful APIs with SSL/TLS encryption for all data transmission between mobile clients and cloud backends. The multimedia handling system processed geo-tagged images and videos captured during emergencies, attaching precise location metadata and optimizing encoding for transmission over limited bandwidth connections. 
Worked within an established development workflow using Bitbucket for version control, Jira for issue tracking, and Confluence for technical documentation, conducting testing cycles focused on edge-case reliability in low-connectivity environments where traditional debugging approaches fail.
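The offline-first pattern described above can be sketched as a local "outbox": every event is written to on-device storage inside a transaction first, and a separate sync step drains the queue once the network is back. The example below uses Python's standard `sqlite3` module purely for illustration. The real app was built in Flutter, and the table name, function names, and the `upload` callback are hypothetical.

```python
import json
import sqlite3


def open_outbox(path=":memory:"):
    """Open (or create) the local queue of events waiting to be uploaded."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS outbox ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "payload TEXT NOT NULL, "
        "synced INTEGER NOT NULL DEFAULT 0)"
    )
    db.commit()
    return db


def record_event(db, event):
    """Persist an event locally; works with or without connectivity."""
    # `with db` runs the insert in a transaction: commit on success,
    # rollback on error, so a crash mid-write cannot leave a partial row.
    with db:
        db.execute("INSERT INTO outbox (payload) VALUES (?)", (json.dumps(event),))


def sync_pending(db, upload):
    """Upload unsynced events in order; stop at the first connection failure."""
    rows = db.execute(
        "SELECT id, payload FROM outbox WHERE synced = 0 ORDER BY id"
    ).fetchall()
    sent = 0
    for row_id, payload in rows:
        try:
            upload(json.loads(payload))
        except ConnectionError:
            break  # still offline; the remaining rows stay queued
        with db:
            db.execute("UPDATE outbox SET synced = 1 WHERE id = ?", (row_id,))
        sent += 1
    return sent
```

Marking a row as synced only after its upload succeeds means an interrupted sync can simply be retried, at the cost of a possible duplicate upload that the backend would need to tolerate.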

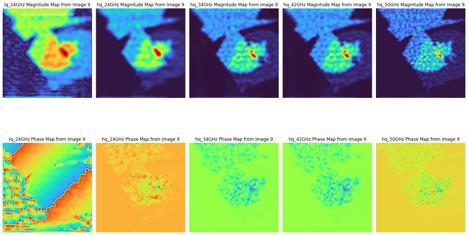

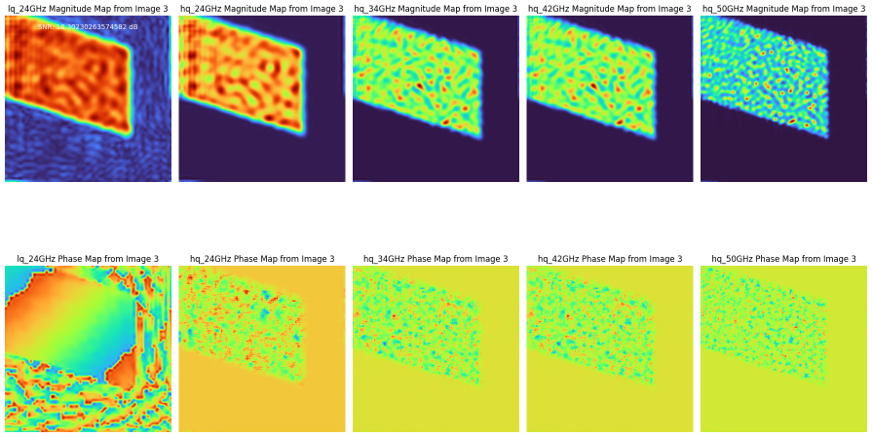

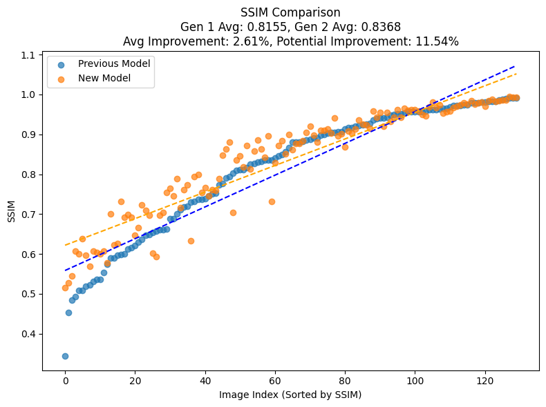

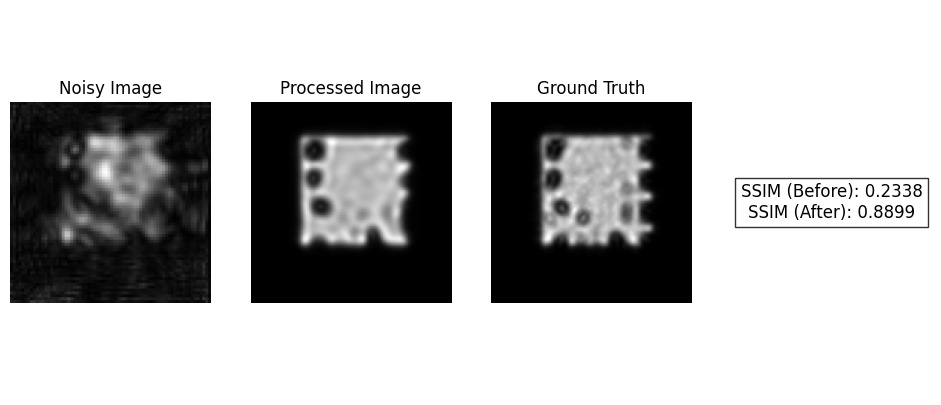

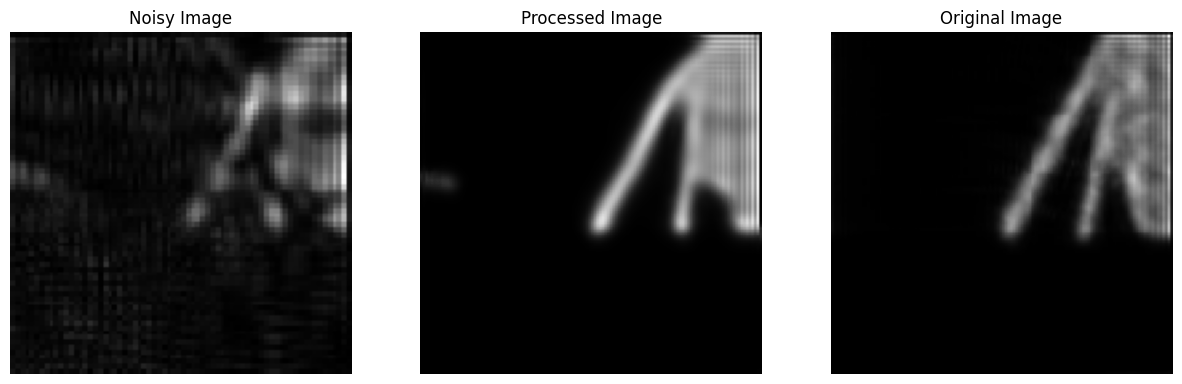

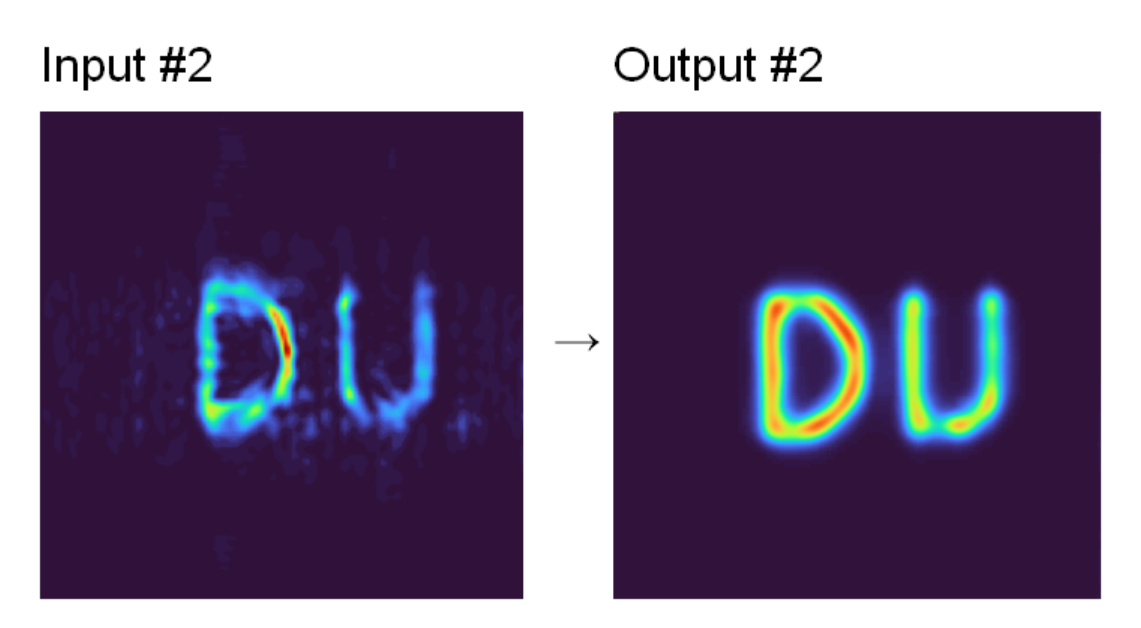

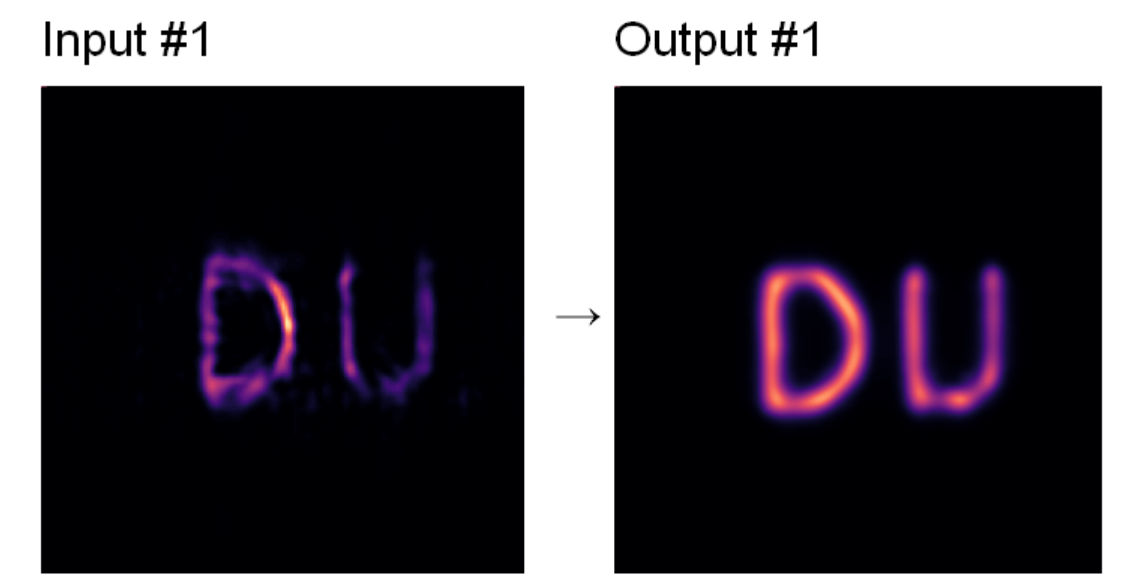

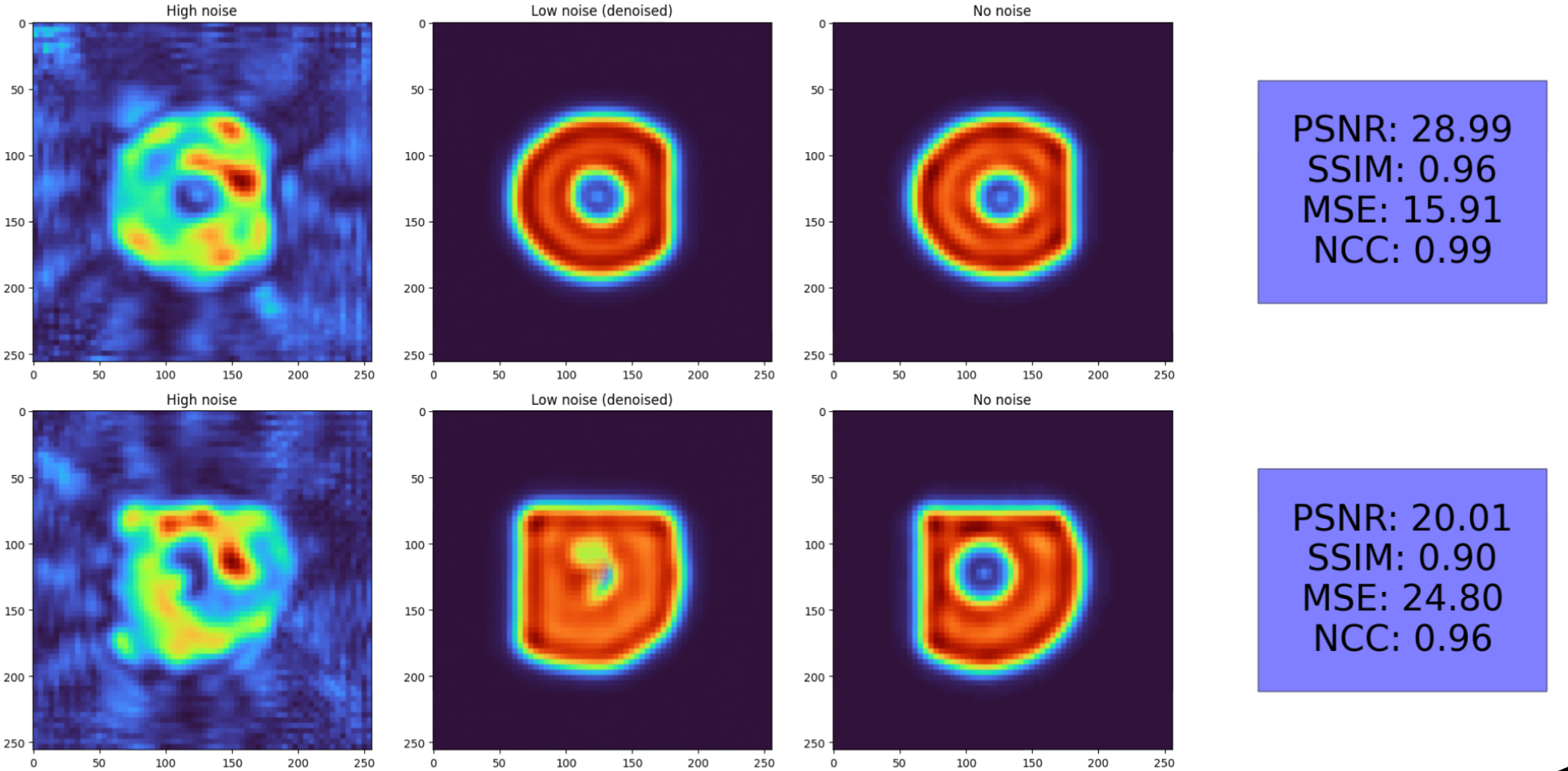

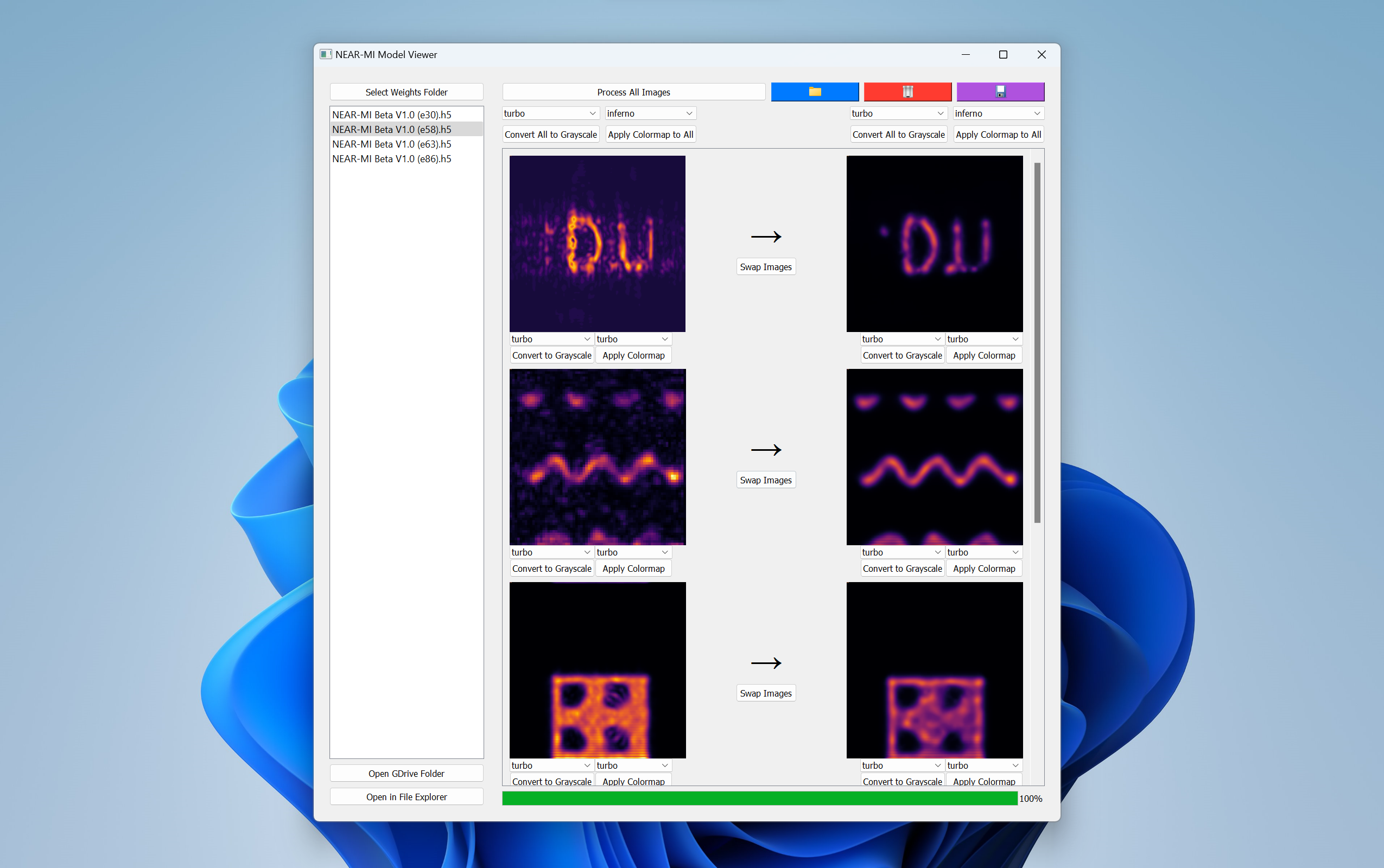

Transitioned from academic research into commercial R&D when the lab's through-wall imaging work spun out into Resolv Technology, a startup aiming to bring affordable handheld microwave imaging devices to consumers. Where the research phase established the data pipeline and baseline models, this role focused entirely on advancing the NEAR architecture to production-grade performance. The core challenge was that microwave imaging captures complex-valued signals containing both magnitude and phase information, and standard image denoising approaches that treat inputs as simple grayscale pixels lose critical electromagnetic data.

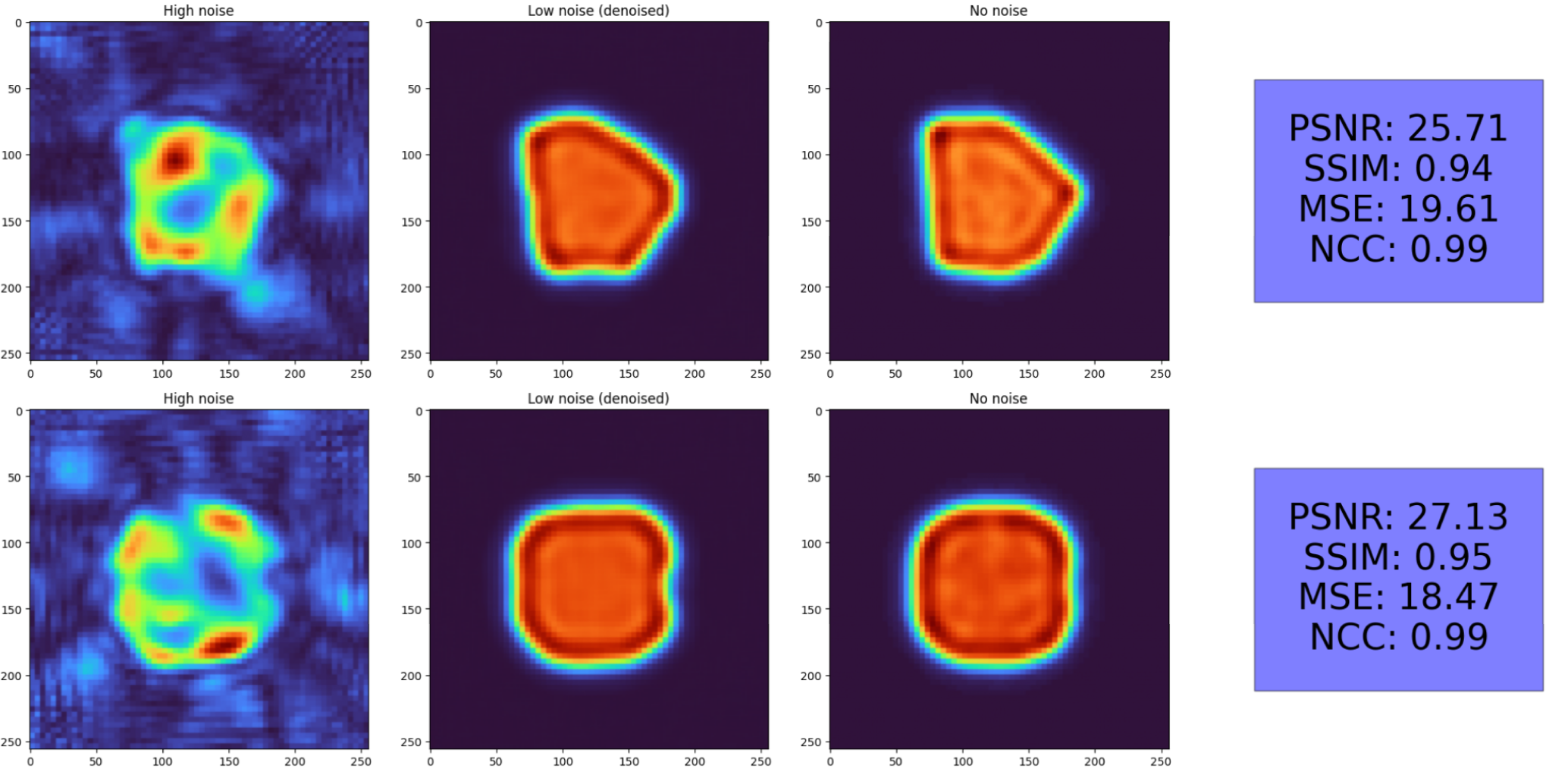

Redesigned the architecture around dual-channel processing that separates phase and magnitude streams, implementing transformer-based modules with pyramidal attention mechanisms to extract hierarchical features across multiple scales before fusing the channels for final reconstruction. Scaled models from the original 49K parameters to 54M using Swin Transformer backbones, systematically running ablation studies across nine architecture versions to isolate which components contributed most to reconstruction quality. Engineered custom loss functions combining Charbonnier loss for outlier robustness, SSIM for structural similarity, perceptual loss for feature-level fidelity, and edge loss for preserving fine boundaries, training with mixed-precision workflows and CUDA optimization that accelerated convergence by 40%. Final models achieved 96% reconstruction accuracy measured by SSIM, +18dB signal improvement in PSNR, and sub-second inference times suitable for real-time deployment. Packaged the complete pipeline as a deployable Python class that experimentalists could integrate directly into their reconstruction workflows, taking raw microwave measurements through Fourier-domain processing and neural network denoising in a single API call. Contributed technical writing to the NSF SBIR grant proposal supporting continued development.
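Two of the quantities named above have compact definitions that are easy to show in code. The Charbonnier loss is a smooth variant of L1, mean(sqrt((x - y)² + ε²)), and PSNR is 10·log10(MAX² / MSE). The NumPy sketch below is a generic illustration of those formulas; the function names, the `eps` value, and the `data_range` default are assumptions for this example and not the training code used for NEAR.

```python
import numpy as np


def charbonnier_loss(pred, target, eps=1e-3):
    """Smooth L1-like loss: mean of sqrt(diff^2 + eps^2)."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    return float(np.mean(np.sqrt(diff * diff + eps * eps)))


def psnr_db(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, data_range]."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    mse = float(np.mean(diff * diff))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


# A uniform error of 0.1 on a [0, 1] image gives MSE = 0.01, i.e. 20 dB.
clean = np.zeros((4, 4))
noisy = clean + 0.1
example_psnr = psnr_db(noisy, clean)
example_loss = charbonnier_loss(noisy, clean)
```

Because PSNR is logarithmic in the mean squared error, an 18 dB gain corresponds to reducing MSE by a factor of 10^1.8, or roughly 63×.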

Joined an REU program developing through-wall imaging technology: a notebook-sized handheld device you hold flush against a wall that reconstructs hidden objects using metasurface antennas, compact flat arrays that manipulate microwave signals without the bulk, radiation exposure, or cost of traditional X-ray systems like TSA security scanners. The core challenge was that commercial deployment restricts operation to the 0.25 GHz ISM band, and this severe bandwidth limitation produces images where a screwdriver behind drywall appears as an indistinct smear with speckle noise, diffraction rings, and ghosting artifacts rather than a recognizable shape.

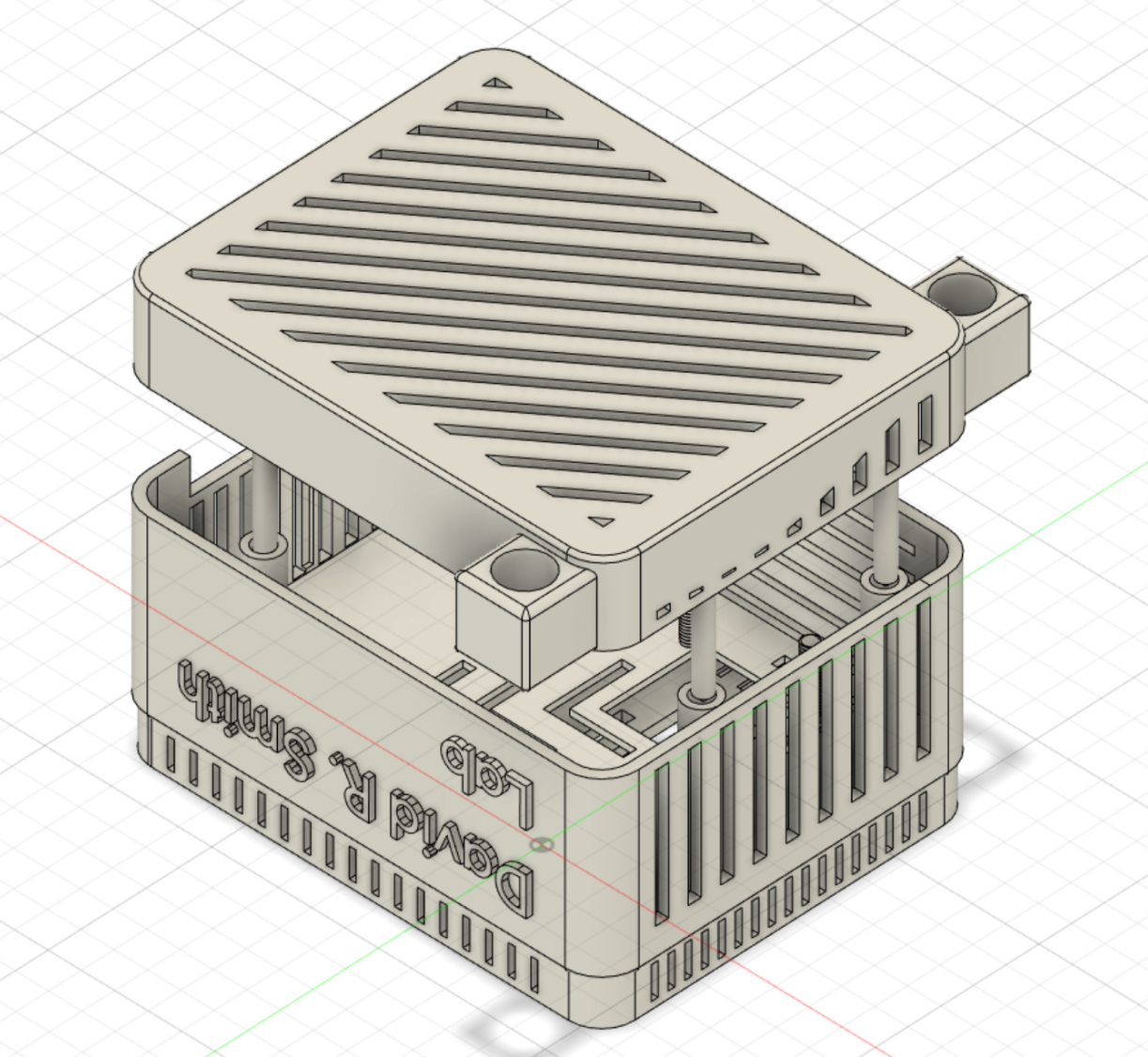

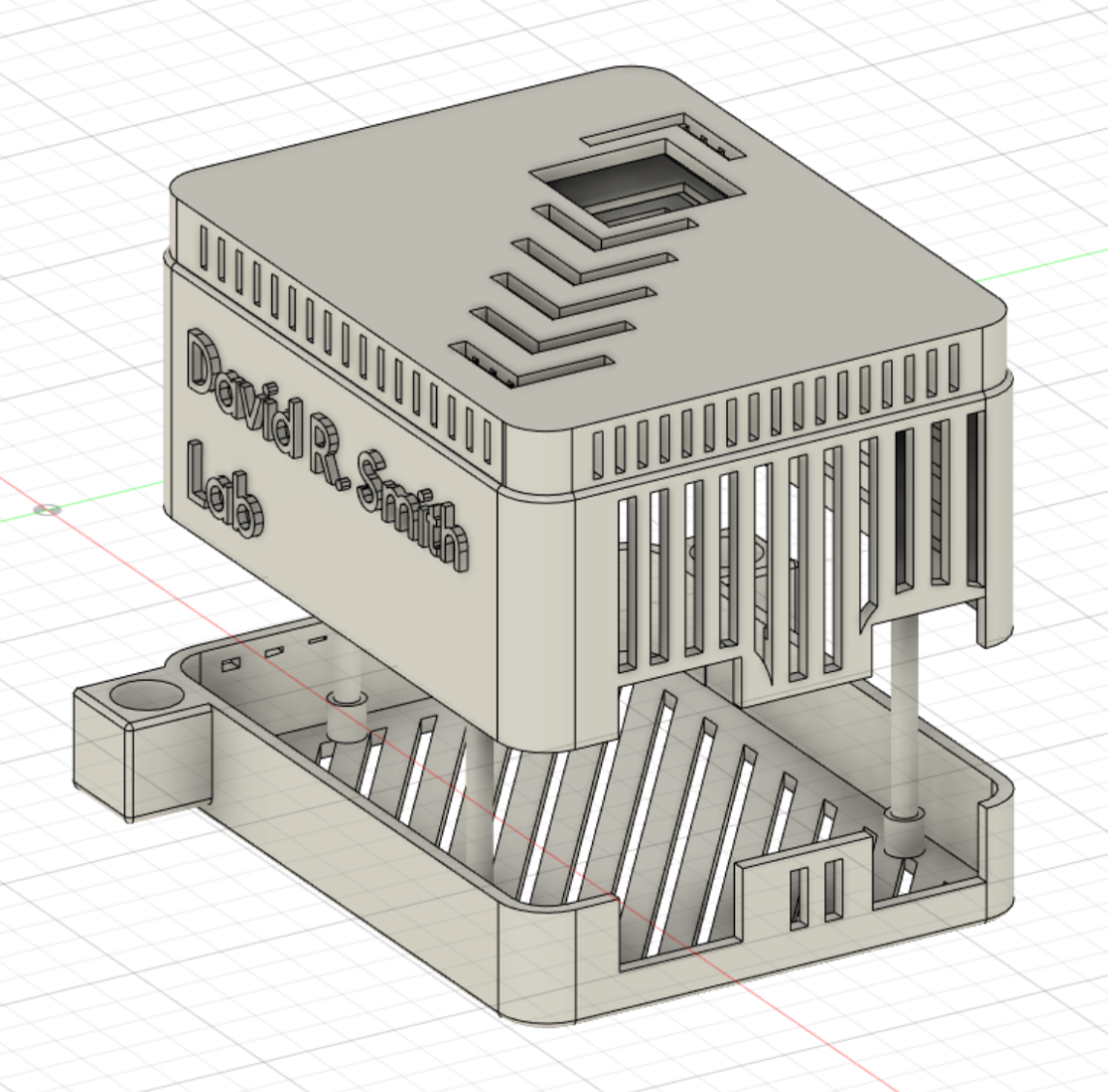

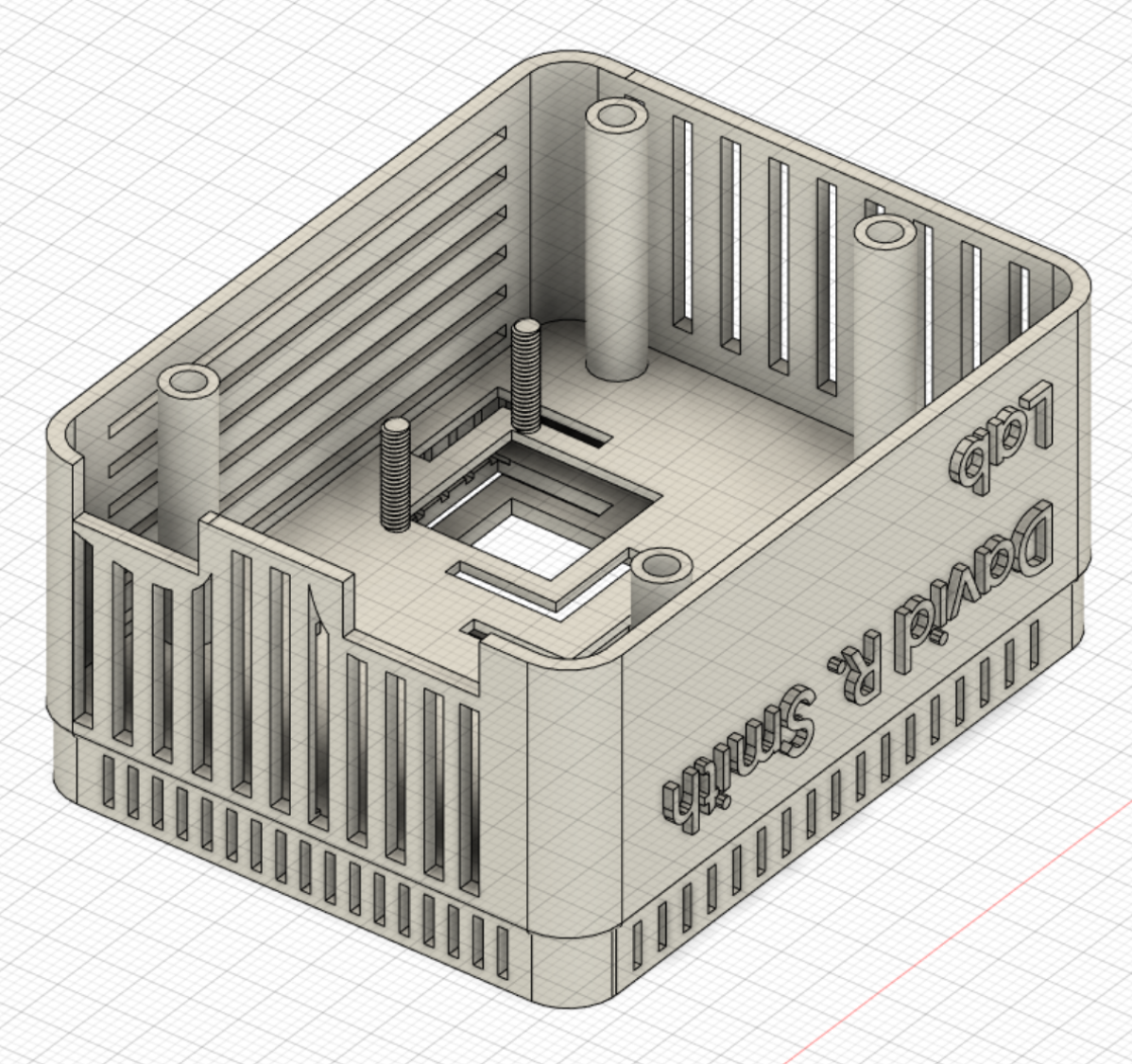

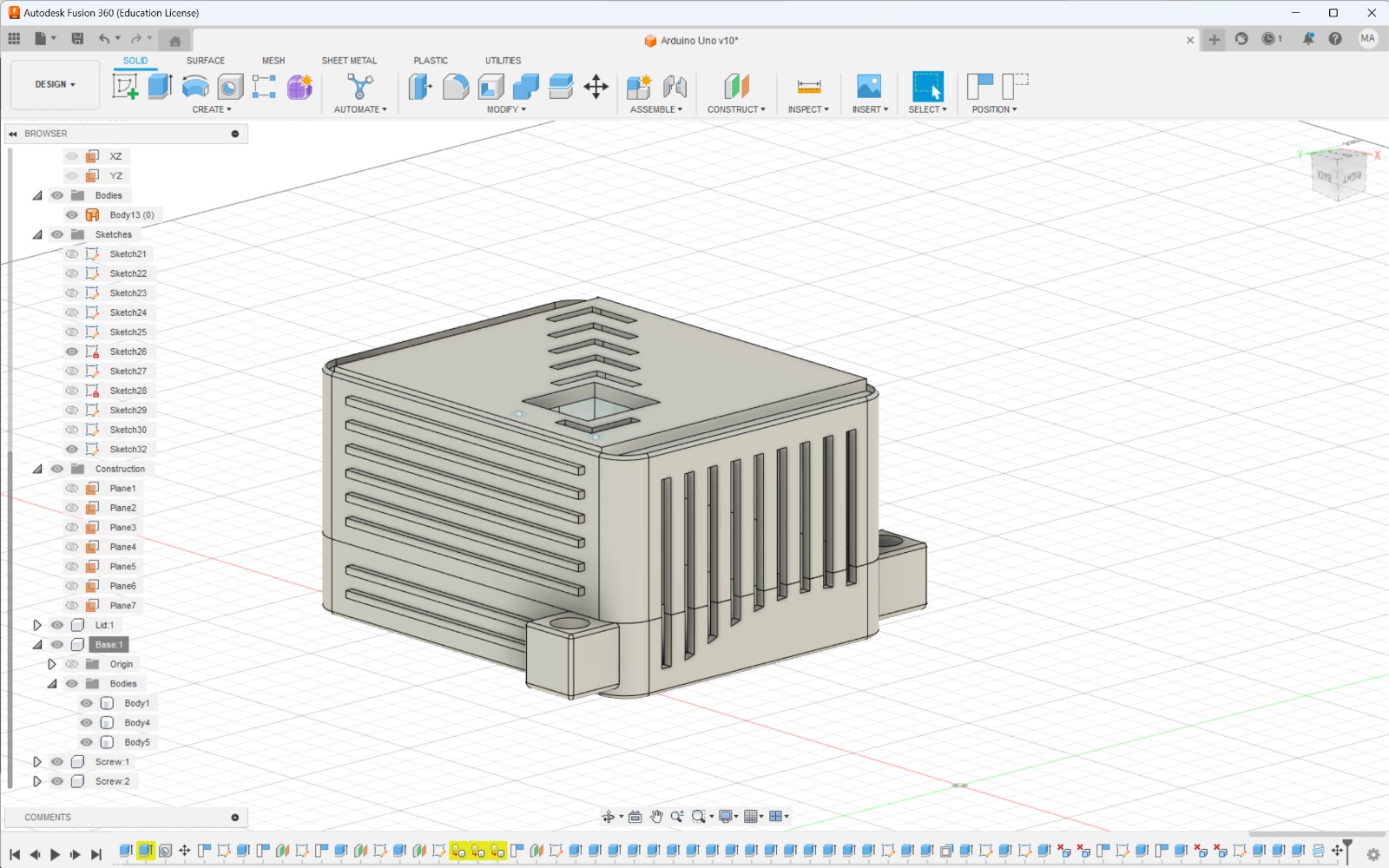

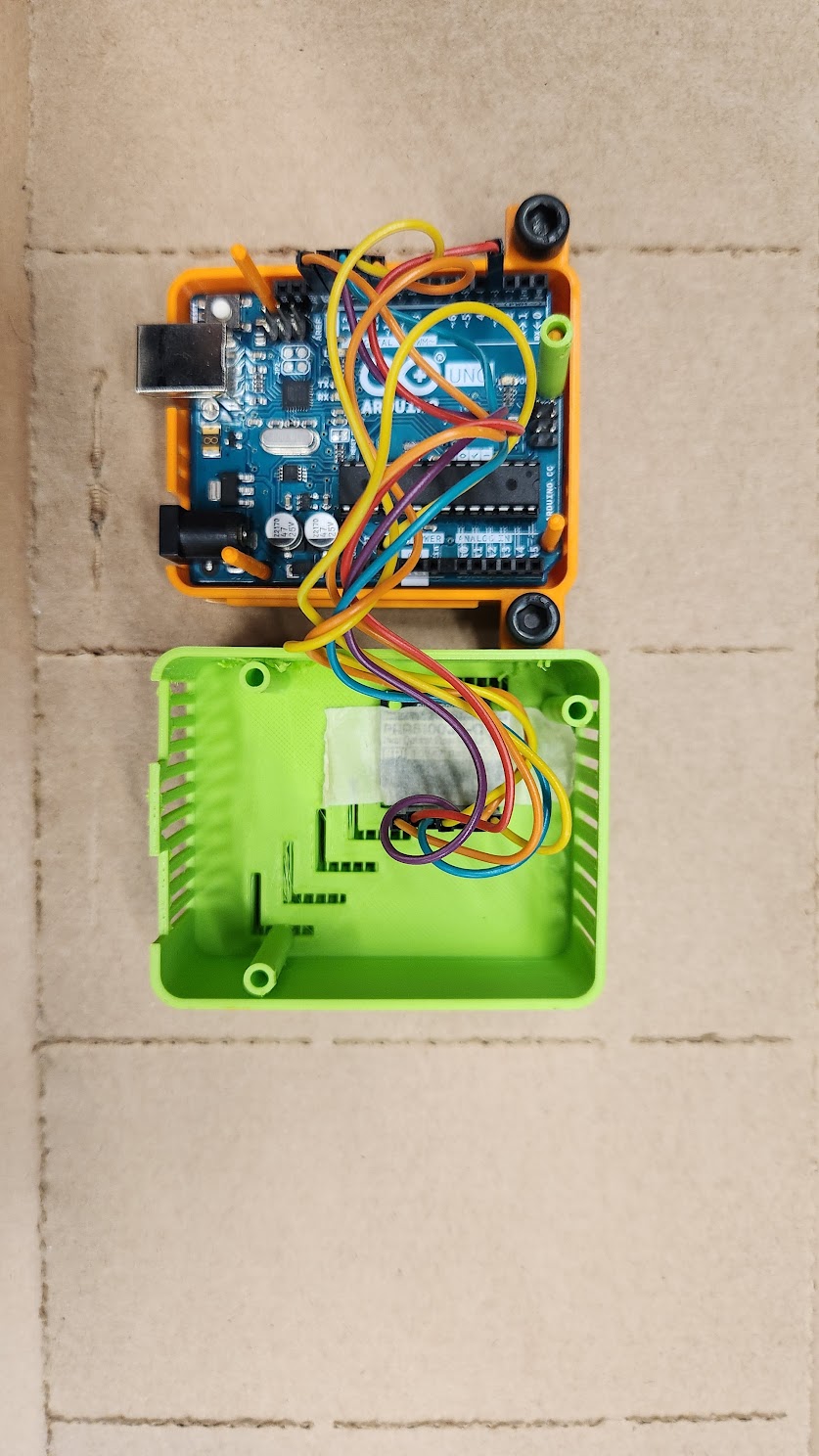

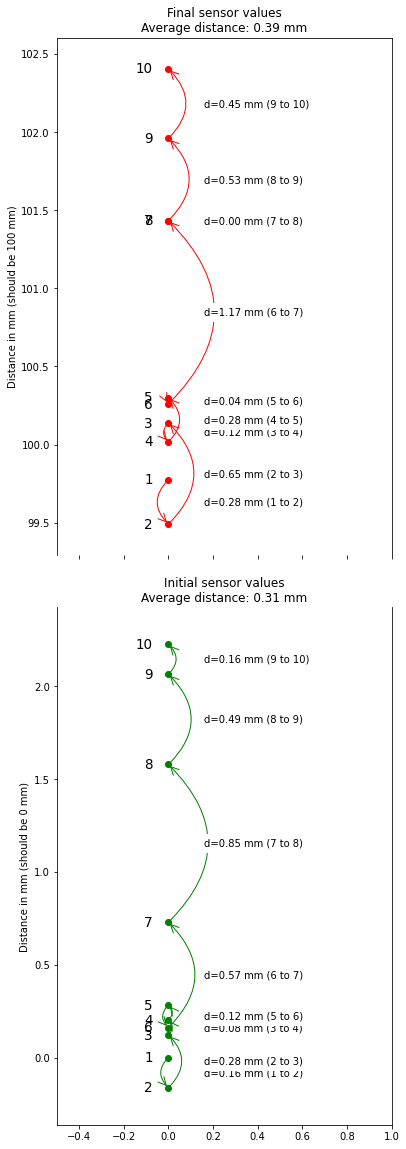

My role spanned hardware integration and foundational ML work for signal reconstruction. On the hardware side, the antenna must move in precise increments of roughly half the operating wavelength per the Nyquist sampling theorem, meaning positioning errors directly corrupt image quality. I developed a high-precision optical sensor system using the PMW3901, writing Arduino firmware for motion counting and a Python API handling calibration, resets, and continuous logging during scans, achieving spatial accuracy between ±0.2 mm and ±0.6 mm, validated across grid positioning tests. I designed 3D-printed mounts in Fusion 360 securing the sensor to the antenna stage with stable alignment and minimal vibration.
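The half-wavelength step size and the conversion from raw sensor counts to millimetres are both simple arithmetic, sketched below. The 10 GHz frequency in the example and the calibration numbers are placeholders chosen only to make the arithmetic concrete. The function names are hypothetical and are not the API of the actual tool.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def half_wavelength_mm(freq_hz):
    """Spatial sampling step of lambda/2, in millimetres, for a given frequency."""
    wavelength_m = SPEED_OF_LIGHT_M_S / freq_hz
    return wavelength_m / 2.0 * 1000.0


def calibrate_mm_per_count(known_distance_mm, counts):
    """Derive a scale factor by moving the stage a known distance."""
    if counts == 0:
        raise ValueError("calibration move produced no sensor counts")
    return known_distance_mm / counts


def counts_to_mm(counts, mm_per_count):
    """Convert accumulated optical-flow counts into physical displacement."""
    return counts * mm_per_count


# Placeholder numbers for illustration only.
step_mm = half_wavelength_mm(10e9)             # about 14.99 mm at 10 GHz
scale = calibrate_mm_per_count(100.0, 2000)    # 0.05 mm per count
moved_mm = counts_to_mm(300, scale)            # about 15 mm
```

With a step on the order of millimetres to centimetres, a positioning error of a few tenths of a millimetre is a small fraction of the sampling interval, which is why sub-millimetre tracking matters here.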

For training data, I built a Python pipeline using OpenCV to programmatically generate hundreds of thousands of random polygons simulating arbitrary hidden objects: pipes, studs, wiring, tools, weapons, rodents, water damage patterns. These fed into an electromagnetic simulation framework I retrofitted into a highly parallelized pipeline that kept all 64 CPU cores at 100% utilization, with optimized interprocess communication via shared memory and queue batching, generating 200K+ paired examples of degraded low-bandwidth images against clean high-frequency ground truths. Using this dataset, I developed nine successive model architectures scaling from 49K to 54M parameters, implementing U-Net structures with attention gates and squeeze-excitation blocks to progressively improve noise reduction and feature preservation.

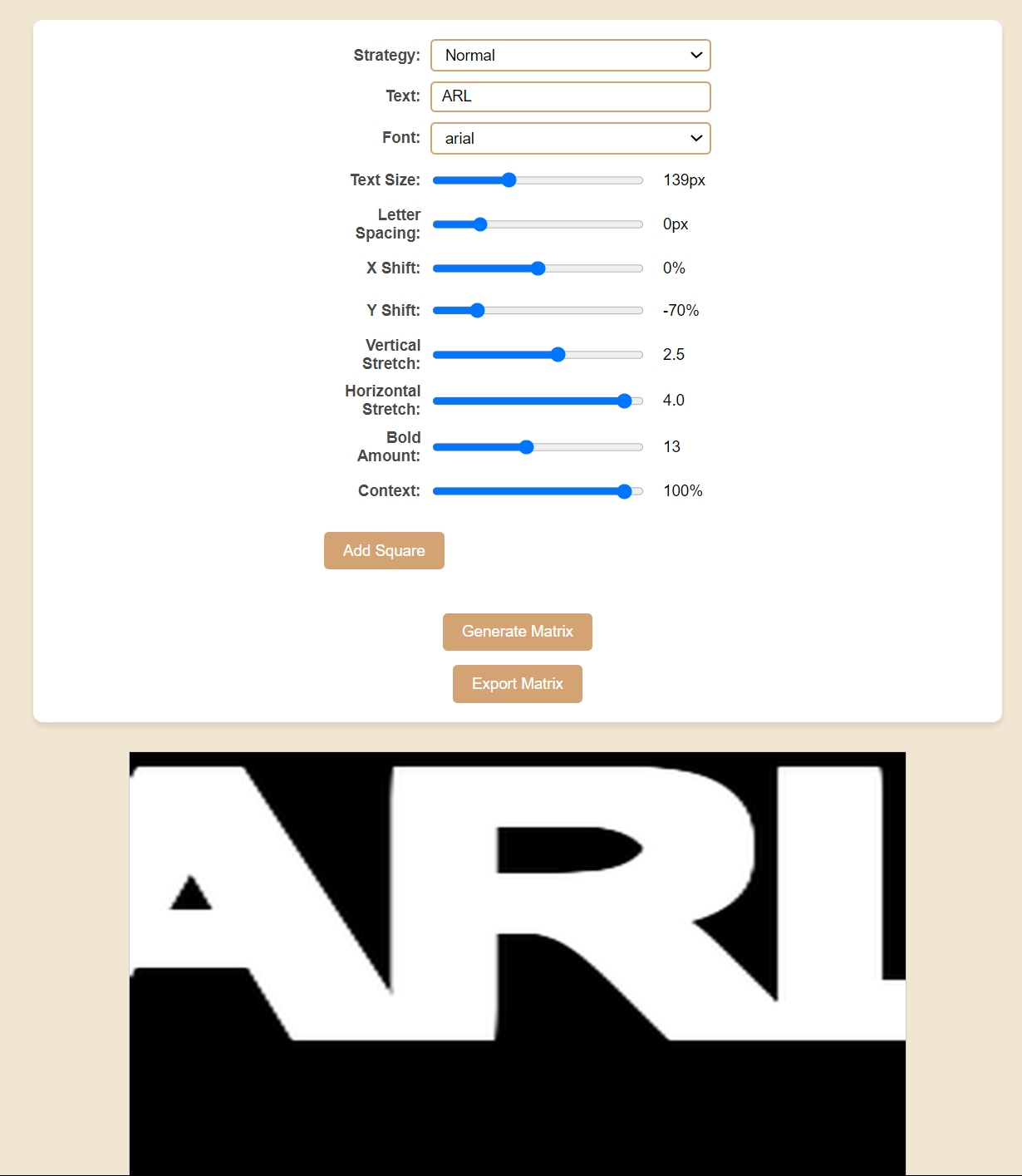

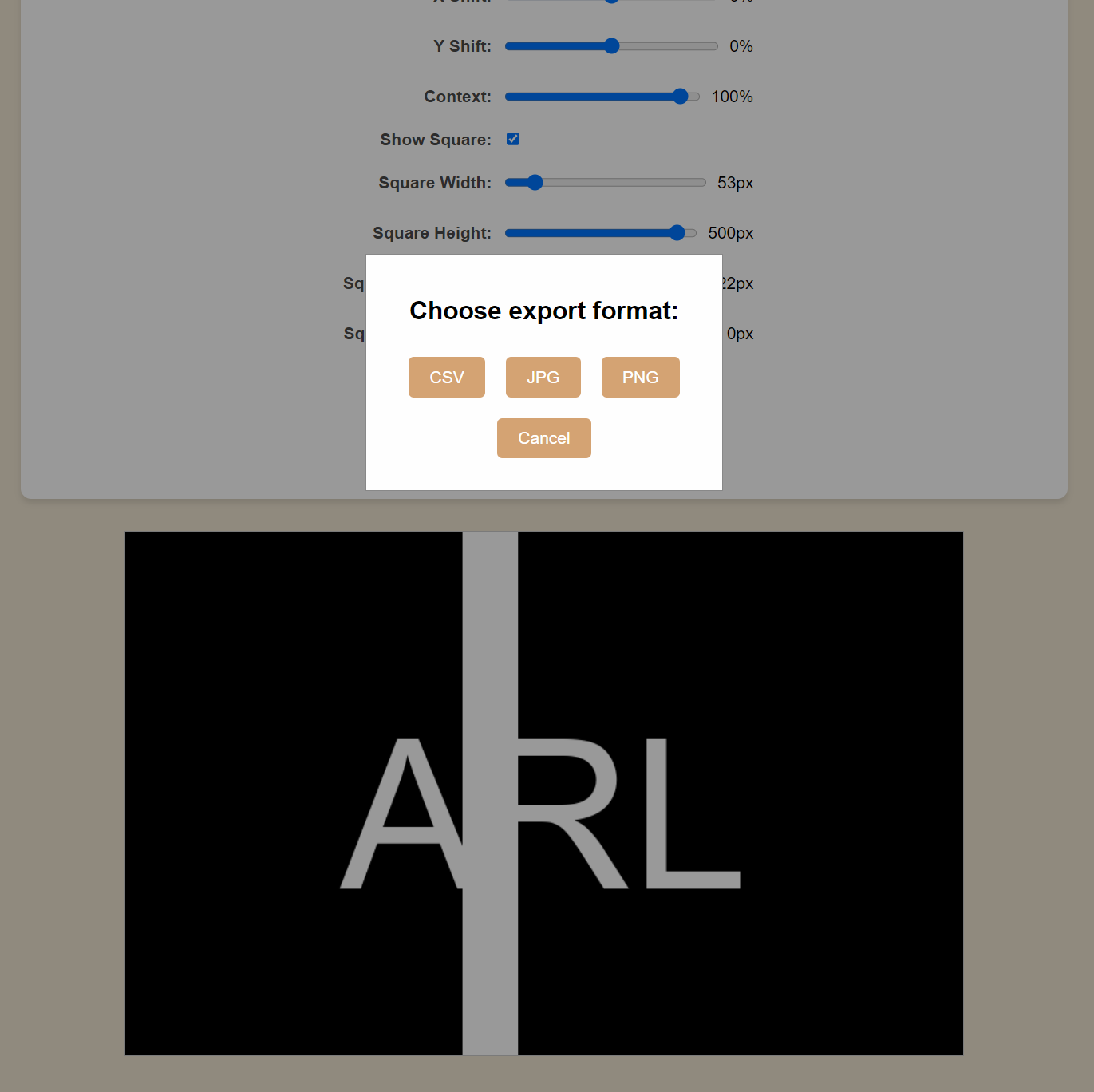

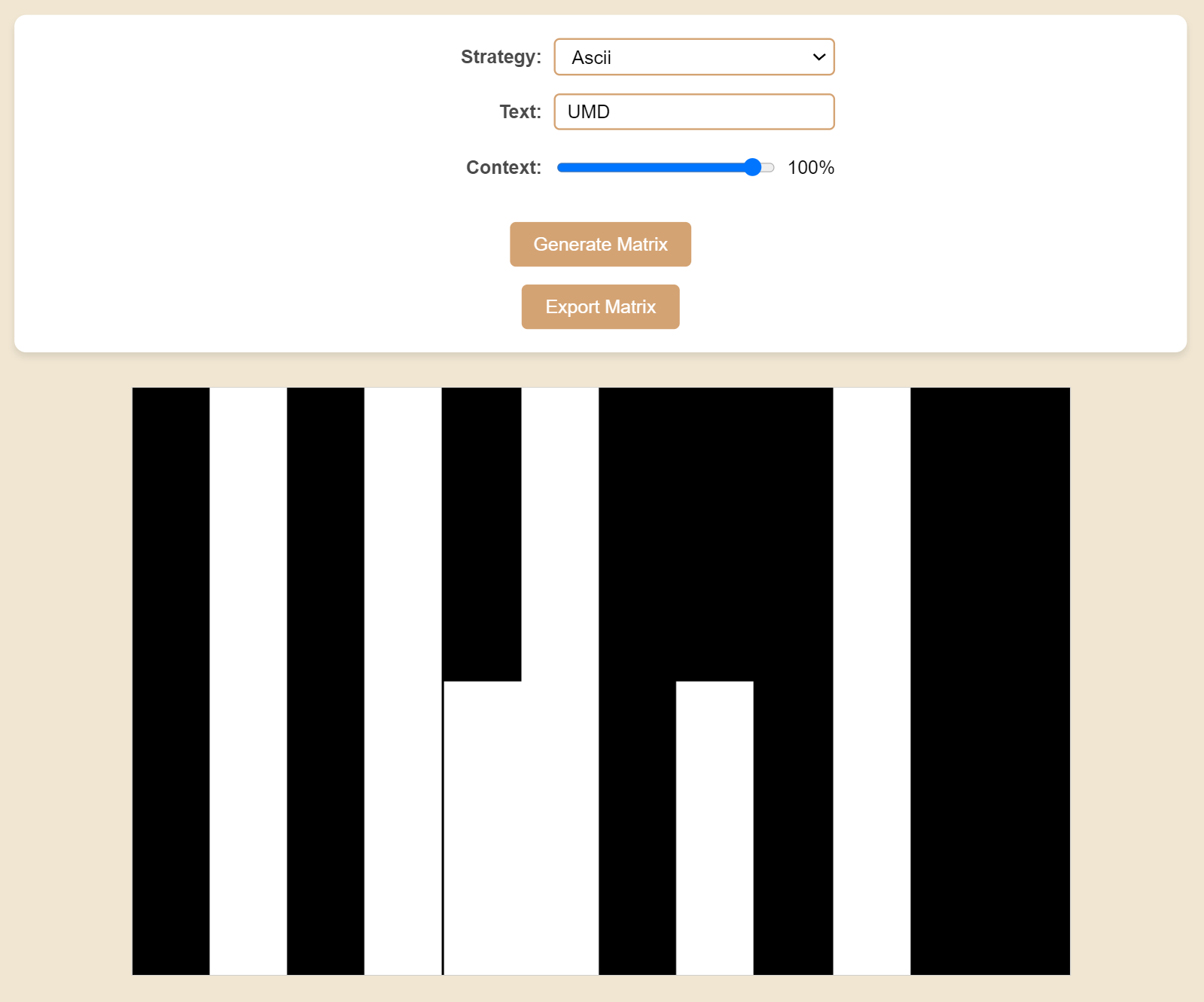

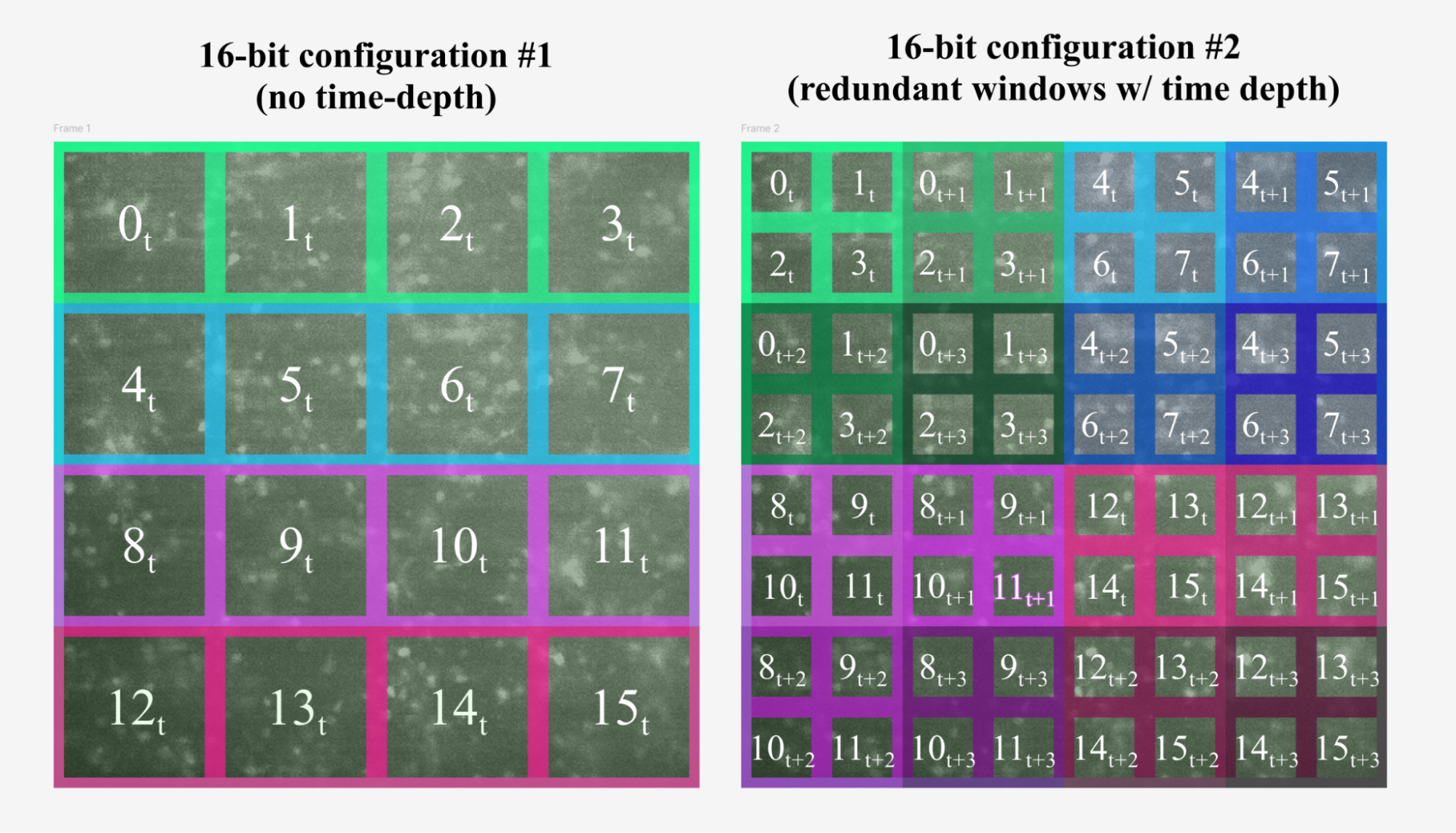

Built a closed-loop neural imaging system that processed live brain microscopy video at 20Hz, meaning the software ingested a new frame of brain activity data every 50 milliseconds, detected which neurons were firing, and triggered optical stimulation back onto the brain tissue within 50ms total latency. The core challenge was a real-time distributed systems problem: calcium imaging produces continuous high-frequency video streams where each frame contains intensity data for 40+ regions of interest (individual neurons), and the system needed to track activity patterns, compute signal transformations, and coordinate hardware responses fast enough to influence the neural activity it was observing. Engineered the Python backend using Redis pub/sub for data streaming between pipeline stages, WebSocket protocols for low-latency communication, and an asyncio event-driven architecture coordinating concurrent operations across the acquisition, processing, and stimulation modules. Implemented ΔF/F calculations, a standard transformation for detecting neural firing from raw fluorescence signals, using uniform_filter1d with a 40-frame sliding window algorithm, with Dask distributing parallel computation across batch aggregations of 20 frames and deque-based buffers enabling simultaneous tracking of calcium dynamics across all monitored neurons. Developed a Flask/React web application that controlled a Digital Micromirror Device, essentially an 800×500 pixel optical projector that could shine precisely targeted light patterns onto brain tissue to activate specific neurons. The interface supported dynamic matrix generation with configurable ASCII/binary encoding and 8-bit intensity control, plus multi-modal stimulus inputs for running context-dependent learning experiments in neural plasticity research. 
Built Echo State Network machine learning models, trained with ridge regression (Tikhonov regularization), to predict non-stationary neural responses, achieving 30% improved prediction accuracy on continuous two-photon microscopy streams. Contributions acknowledged in the resulting publication.
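The ΔF/F transformation mentioned above is (F − F₀) / F₀, where F₀ is a baseline fluorescence estimate. A minimal sketch using `scipy.ndimage.uniform_filter1d` with a 40-frame window is shown below. It assumes the baseline is the moving average itself, uses a centered window for simplicity (a live pipeline would need a trailing one), and assumes strictly positive fluorescence. The array layout and function name are hypothetical.

```python
import numpy as np
from scipy.ndimage import uniform_filter1d


def delta_f_over_f(traces, window=40):
    """Compute dF/F for an array shaped (frames, rois).

    The baseline F0 is a moving average over `window` frames along the
    time axis; edges are padded by repeating the nearest frame.
    """
    traces = np.asarray(traces, dtype=np.float64)
    baseline = uniform_filter1d(traces, size=window, axis=0, mode="nearest")
    return (traces - baseline) / baseline


# 120 frames (6 s at 20 Hz) for 40 ROIs, flat at 100 with one transient.
frames, rois = 120, 40
traces = np.full((frames, rois), 100.0)
traces[60, 0] = 200.0  # a single bright frame in ROI 0
dff = delta_f_over_f(traces)
```

In this toy input the spike frame sits inside its own averaging window, so the baseline there is (39·100 + 200) / 40 = 102.5 and the resulting ΔF/F is 97.5 / 102.5 ≈ 0.95, while untouched ROIs stay at zero.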

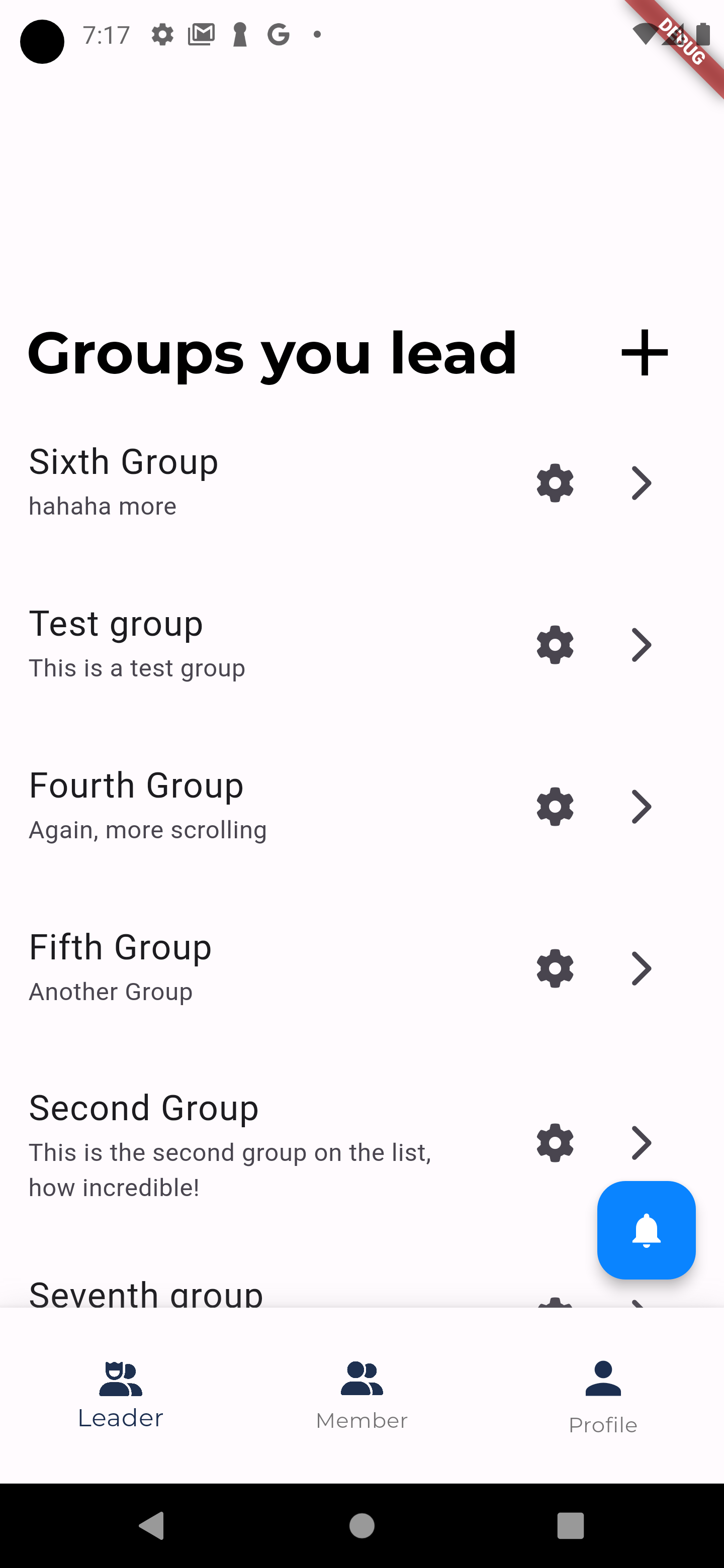

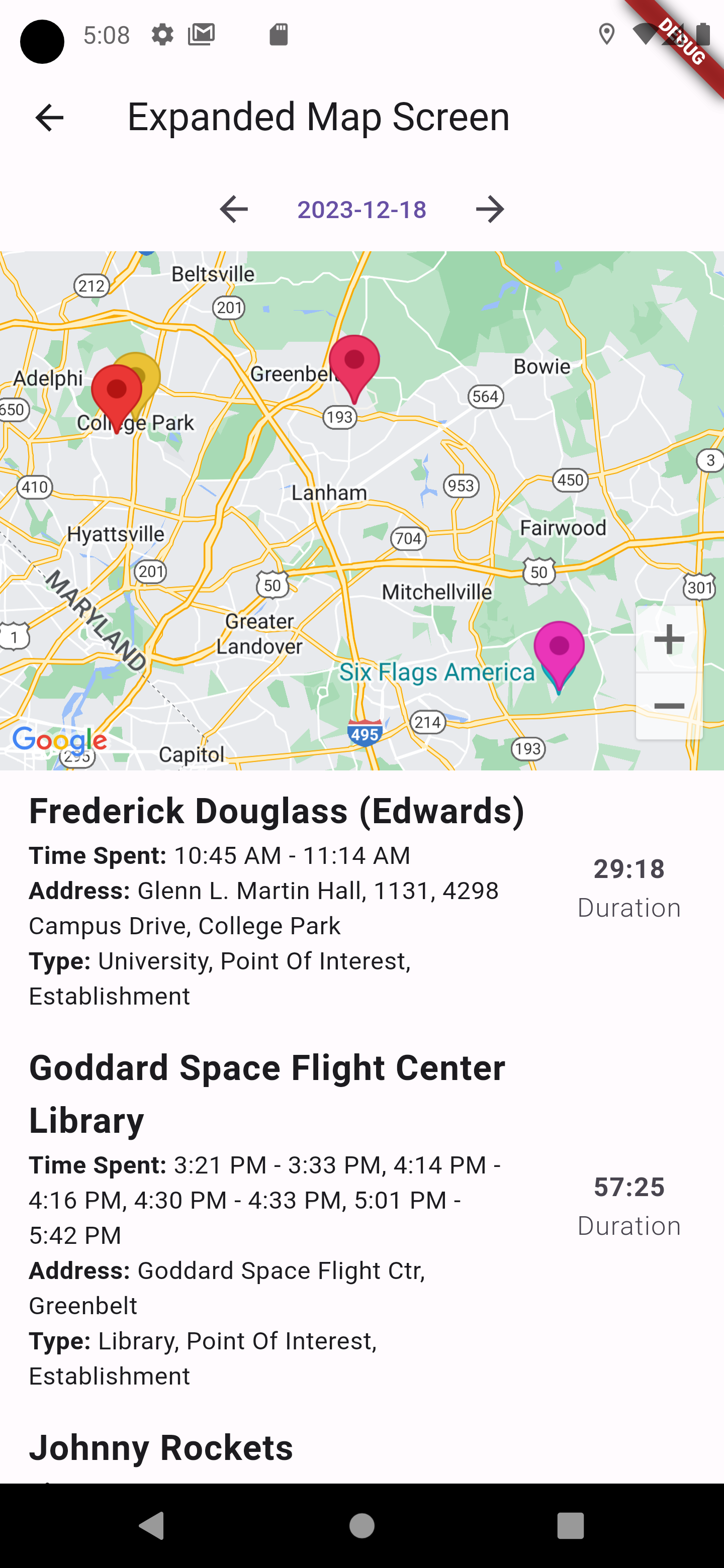

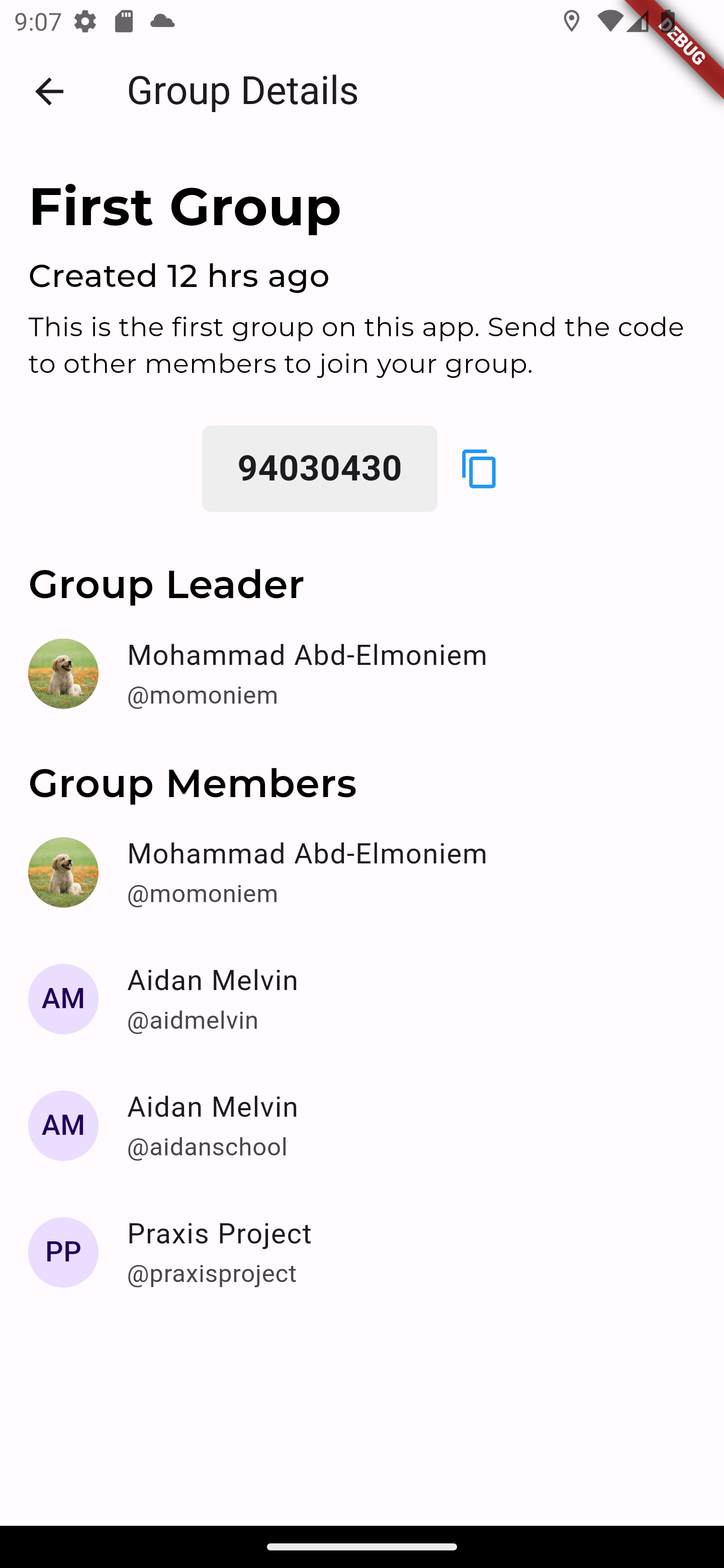

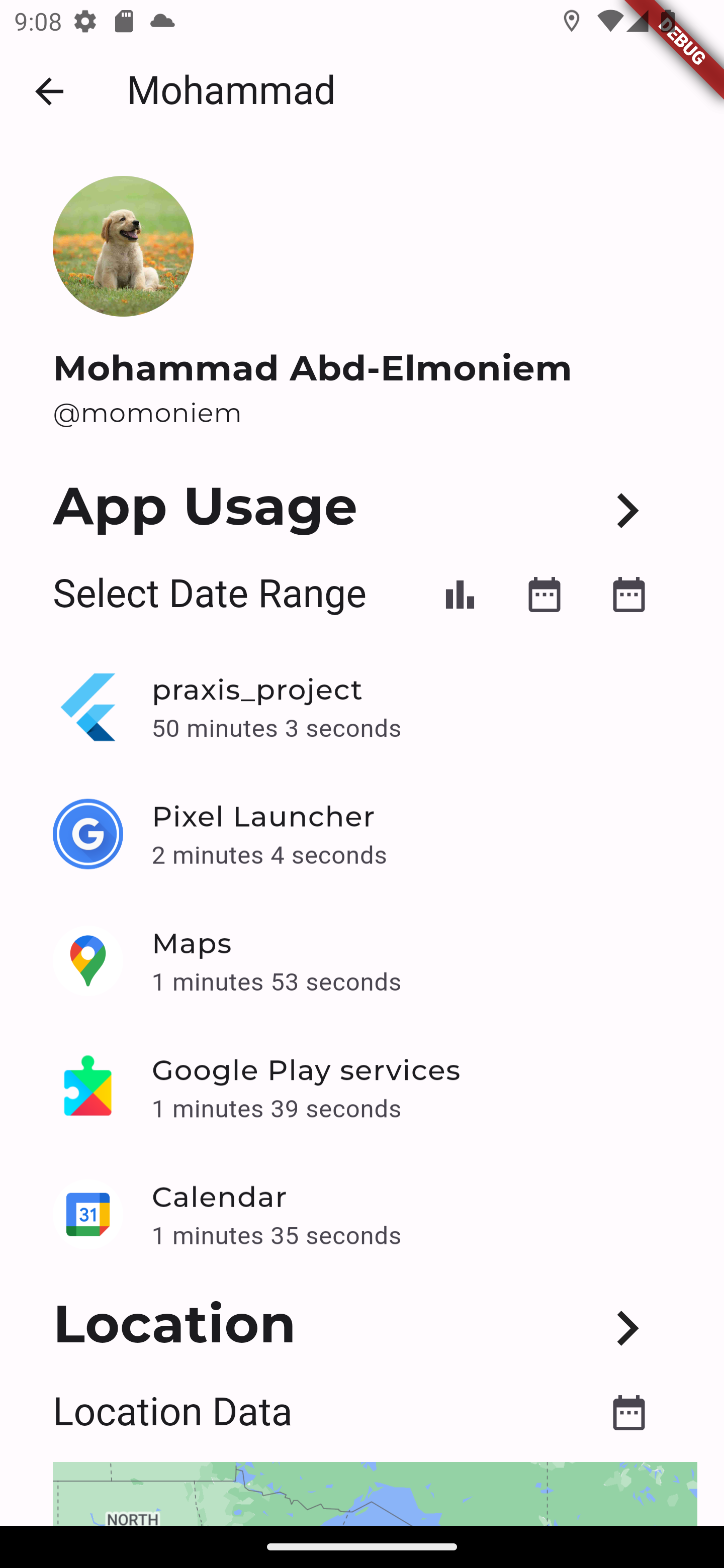

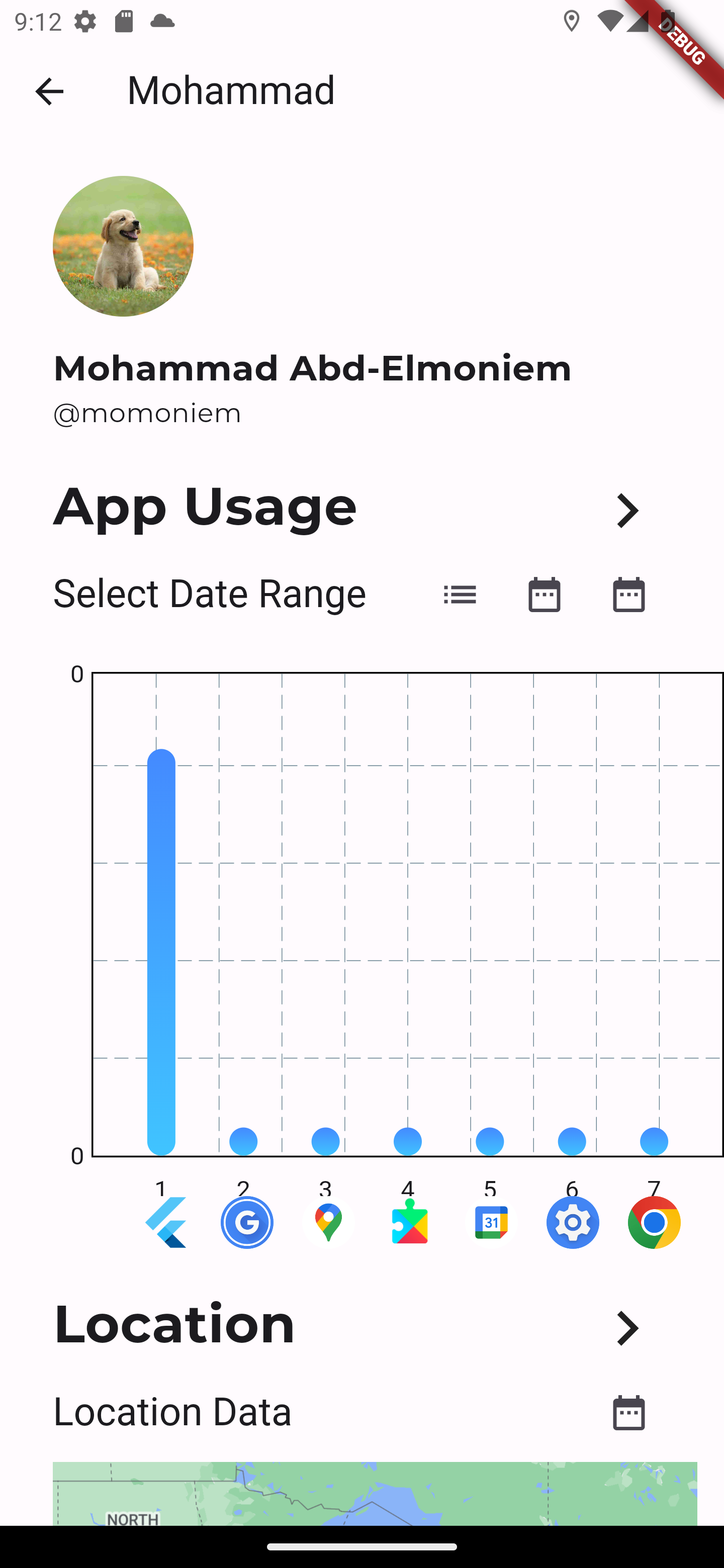

Led two teams of approximately 8 engineers each across two semesters and two separate contracts with General Dynamics through a university program, managing full project lifecycles from requirements gathering through deployment using Agile/Scrum methodologies. The first contract delivered a group coordination platform designed for real-time team tracking and communication. Built the frontend in Flutter for cross-platform mobile deployment with Firebase handling cloud synchronization and real-time data streaming across devices. Implemented geolocation analytics integrating Google Maps API with haversine distance calculations and k-means clustering algorithms to visualize movement patterns and group proximity on interactive dashboards. Developed an NLP pipeline using TensorFlow and Flask deployed on Google Cloud Platform that parsed incoming emails to automatically extract topics and summarize content, reducing manual triage for users managing high-volume inboxes. Added calendar-based automation features including an automated “do not disturb” system linked to Google Calendar APIs for smarter notification management.
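The haversine formula mentioned above gives the great-circle distance between two latitude/longitude points. A minimal standard-library sketch follows. The function name and the choice of mean Earth radius are assumptions for this example, not code from the delivered platform.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2.0) ** 2)
    # Clamp to guard against rounding pushing sqrt(a) slightly above 1.
    return 2.0 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


# A quarter of the way around the equator equals pi/2 times the radius.
quarter_equator_km = haversine_km(0.0, 0.0, 0.0, 90.0)
```

Pairwise distances like this are a natural input for proximity views, and for clustering if the clustering step works on projected coordinates or a distance matrix rather than raw degrees.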

The second contract produced an interactive onboarding application, essentially a Kahoot-style quiz platform for enterprise new hire training where administrators could create timed questions and track completion across cohorts. Architected the backend with FastAPI and MongoDB, structuring the Flutter frontend around MVVM patterns to keep business logic separated from UI components and maintain clean testability as the codebase grew. Set up CI/CD pipelines for automated builds and deployments, coordinated code reviews and integration testing across the team, and managed collaboration through Git/GitHub for version control, Slack for communication, and Monday.com for sprint tracking. Both applications shipped to production demonstrating full-stack mobile development, cloud infrastructure, and applied machine learning in enterprise contexts.

Projects

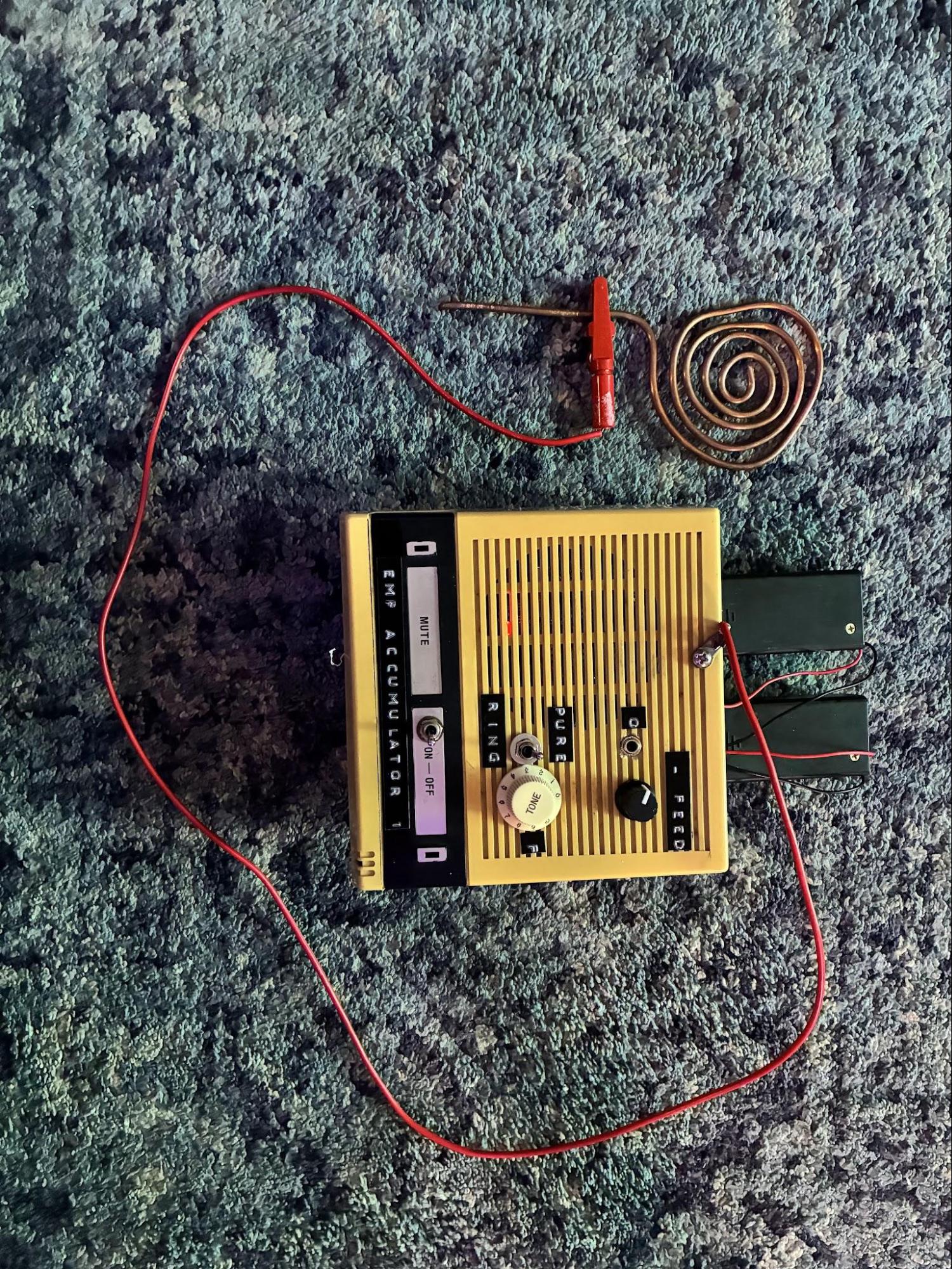

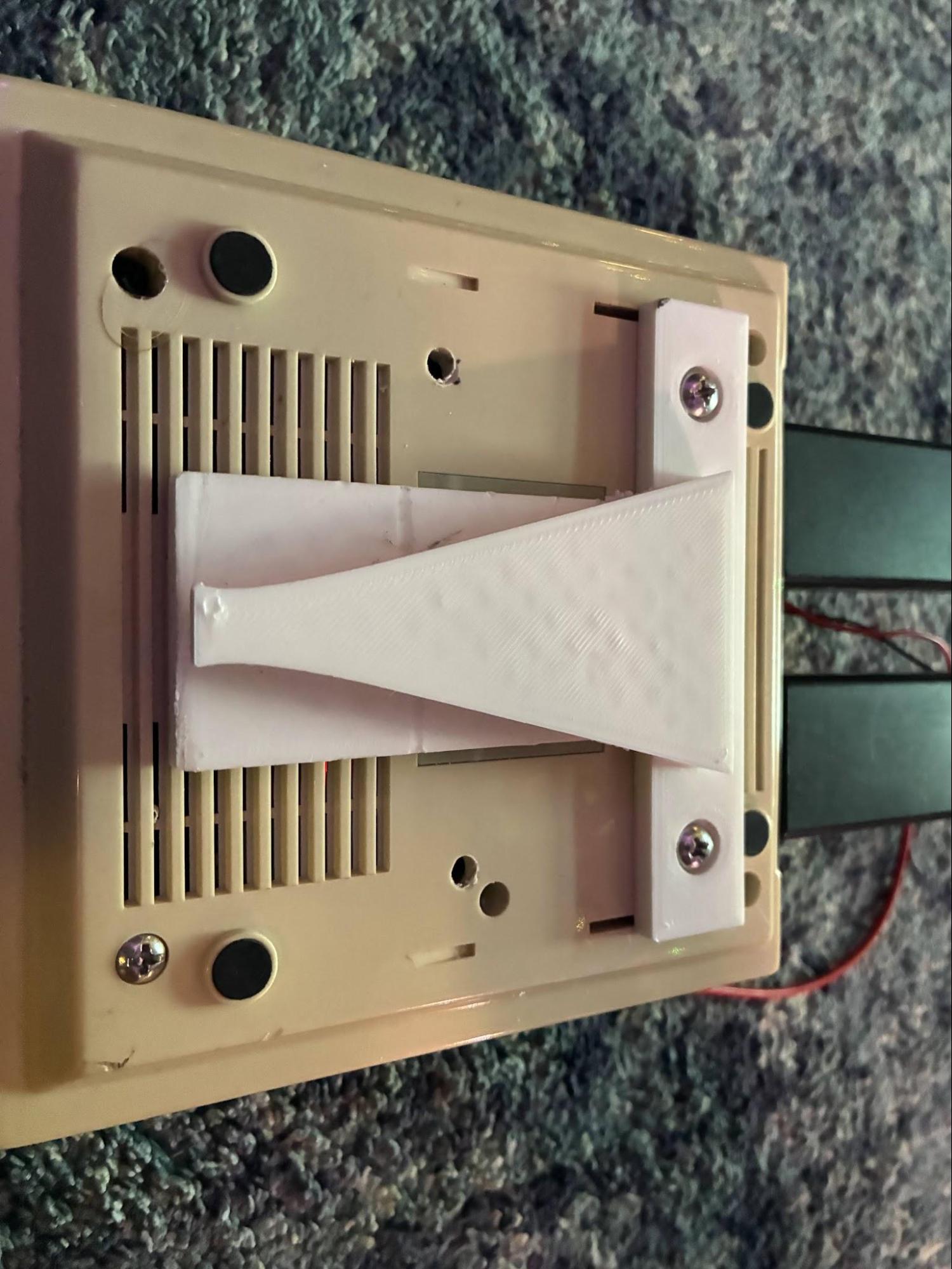

Capstone project for ENEE408J (Audio Electronics Engineering) at the University of Maryland, built with a team of three. The Spatial Wave Accumulator is a portable analog device that captures ambient electromagnetic radiation from everyday sources like power adapters, transformers, CRT TVs, and lighting systems and converts those invisible EM emissions into audio signals that composers, sound designers, and performers can use to create drone-like soundscapes. A spiral antenna feeds into an LC resonant tank tuned to 0.43–0.97 MHz, then through a germanium diode envelope detector that demodulates the captured RF into audible frequencies below 3.3 kHz. The demodulated signal passes through a variable-gain NE5532 amplifier (1–21x), and the user can either output the raw drone directly or route it through a diode ring modulator driven by a variable-frequency oscillator to add tonal complexity and frequency-shifted textures. The whole device runs off two 9V batteries and is housed in a repurposed vintage intercom box with a 3D-printed belt clip for portability. My contributions focused on assembling, testing, and redesigning the ring modulator; building and testing the oscillator prototype; designing the enclosure modifications and belt clip in CAD for 3D printing; and constructing and testing antennas across different shapes and EM sources.
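The tuning range and gain figures above follow from two standard relations: an LC tank resonates at f₀ = 1 / (2π√(LC)), and a voltage gain G corresponds to 20·log10(G) dB. The sketch below works through them. The 100 µH inductance is a hypothetical placeholder, since the actual component values are not given here, and the function names are made up for this example.

```python
import math


def resonant_frequency_hz(inductance_h, capacitance_f):
    """Resonant frequency of an ideal LC tank."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def capacitance_for_frequency_f(freq_hz, inductance_h):
    """Capacitance needed to resonate at `freq_hz` with a given inductance."""
    return 1.0 / ((2.0 * math.pi * freq_hz) ** 2 * inductance_h)


def gain_db(voltage_gain):
    """Voltage gain expressed in decibels."""
    return 20.0 * math.log10(voltage_gain)


F_LOW_HZ, F_HIGH_HZ = 0.43e6, 0.97e6  # tuning range stated in the write-up
L_ASSUMED_H = 100e-6                  # hypothetical inductor value

c_max = capacitance_for_frequency_f(F_LOW_HZ, L_ASSUMED_H)   # lowest frequency
c_min = capacitance_for_frequency_f(F_HIGH_HZ, L_ASSUMED_H)  # highest frequency
tuning_ratio = c_max / c_min  # equals (F_HIGH / F_LOW) ** 2, about 5.09
max_gain_db = gain_db(21.0)   # about 26.4 dB
```

The capacitance ratio does not depend on the inductor chosen: covering 0.43–0.97 MHz with a fixed inductor needs a capacitance swing of about 5.1×, and the 1–21x amplifier range spans 0 dB to roughly 26.4 dB.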

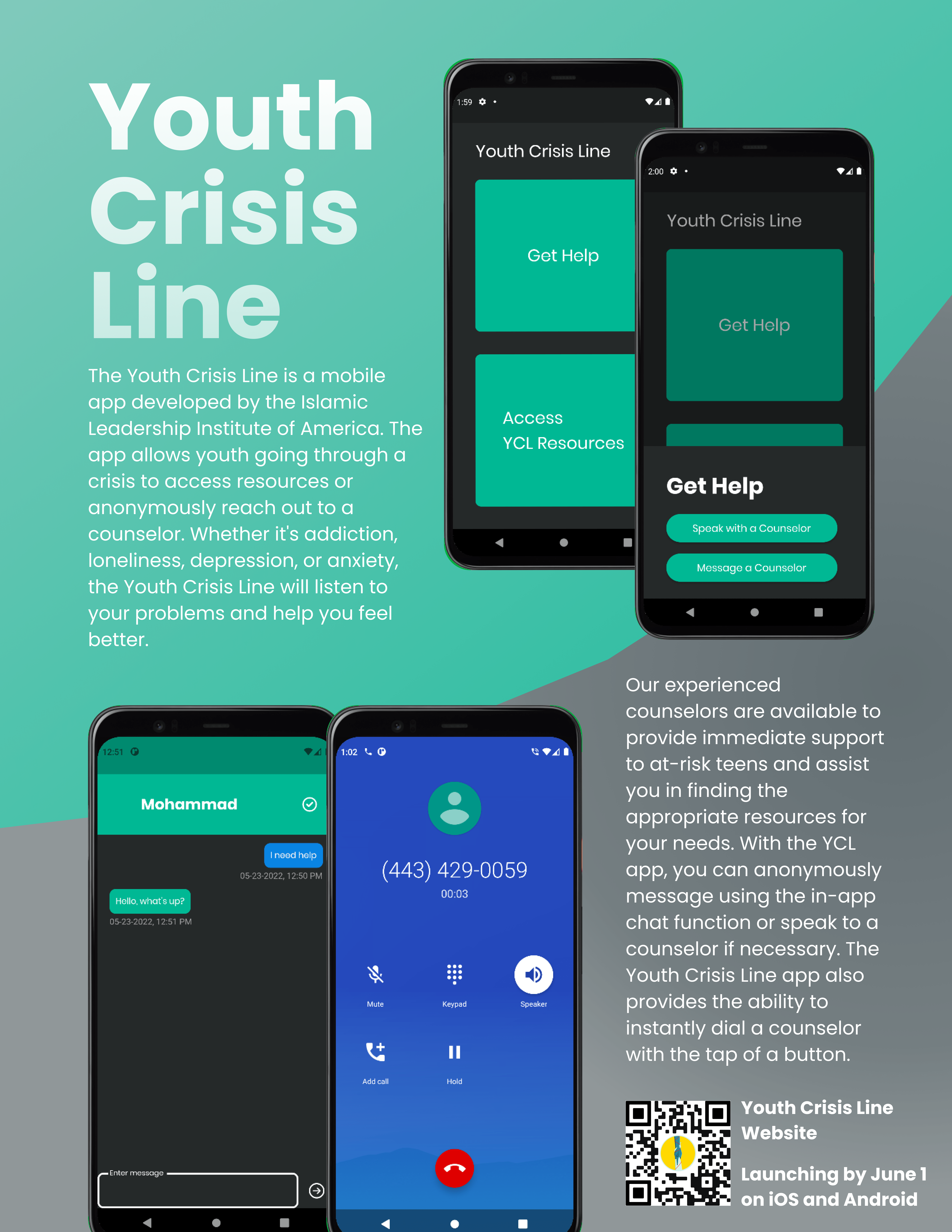

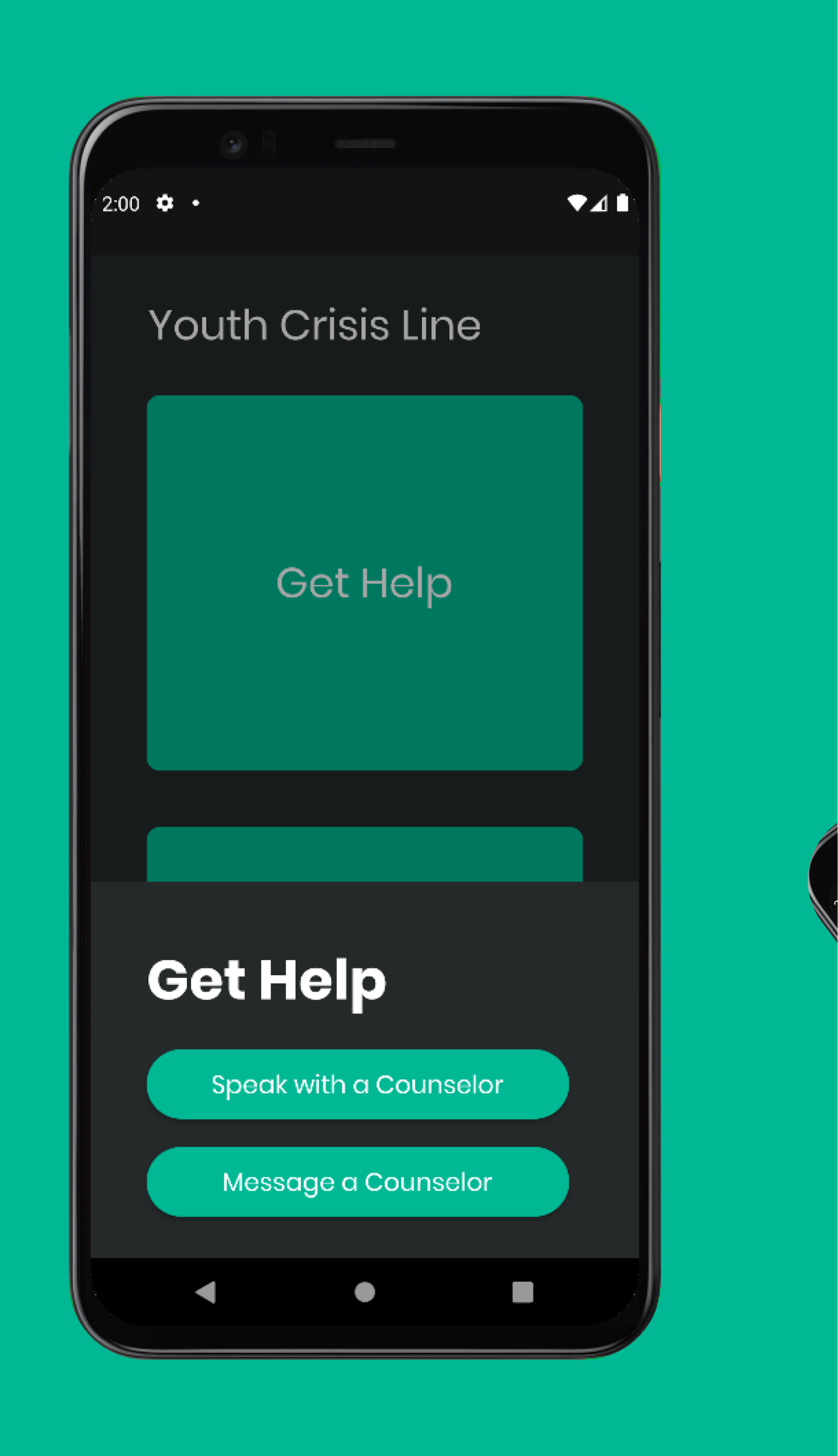

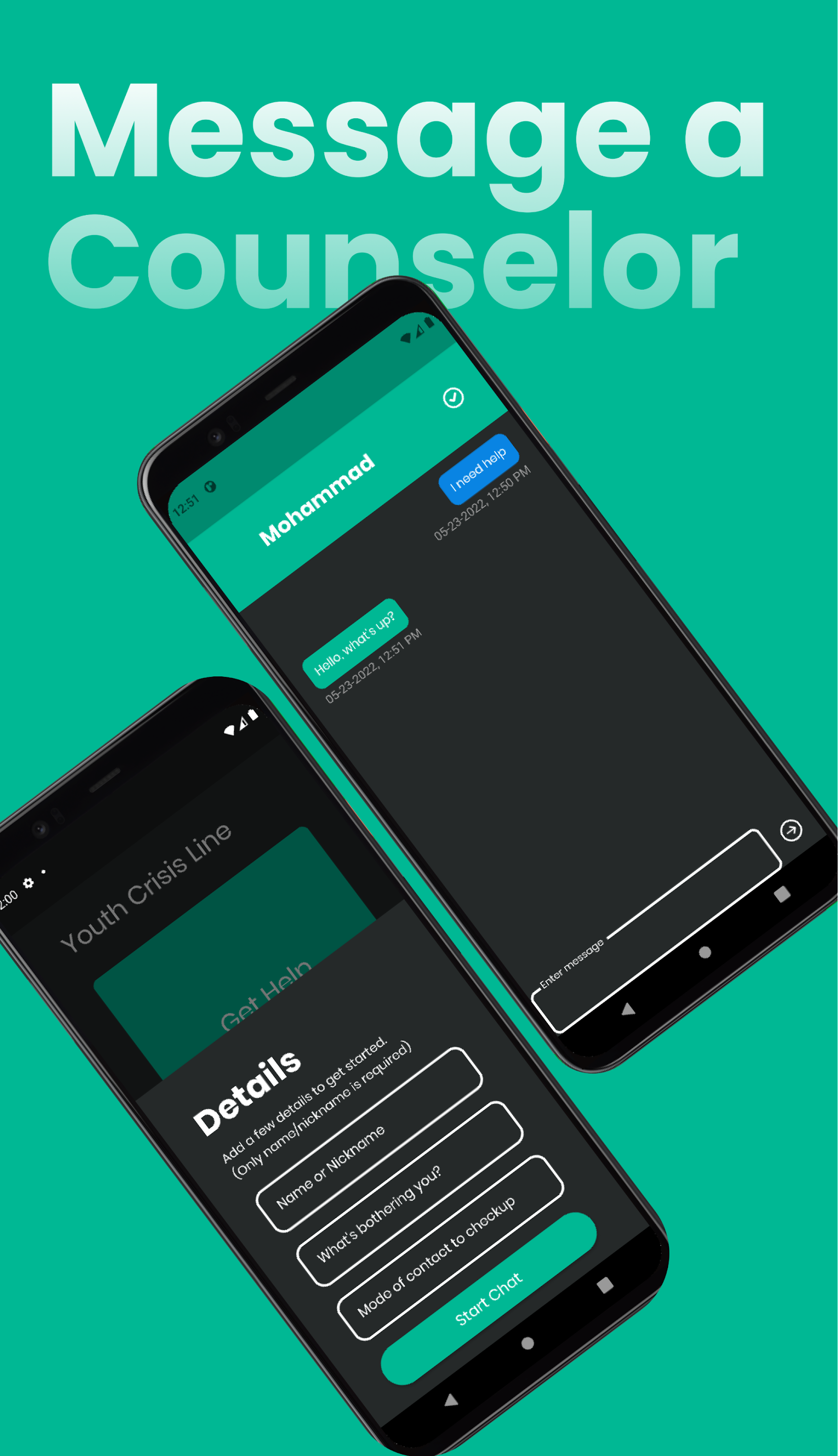

Volunteer project for a local youth organization that needed a way for young people in the community to reach out for help, either through a phone hotline or an in-app messaging service. Built four mobile applications in Flutter and Dart for iOS and Android in just two days, from initial development through designing the Google Play Store flyers to full deployment. Architected a secure authentication system with login, registration, email verification, password recovery, and data deletion to comply with privacy requirements for a youth-facing platform. Implemented an AES-encrypted chat module backed by Firebase Realtime Database for end-to-end encrypted communication, ensuring confidentiality for users reaching out in sensitive situations. Handled the full application lifecycle including CI/CD pipelines, beta testing, and App Store Optimization for launches on both the iOS App Store and Google Play Store. Set up Firebase cloud functions and Firestore security rules for real-time data synchronization across the apps, with Material Design interfaces tailored to the organization's branding.

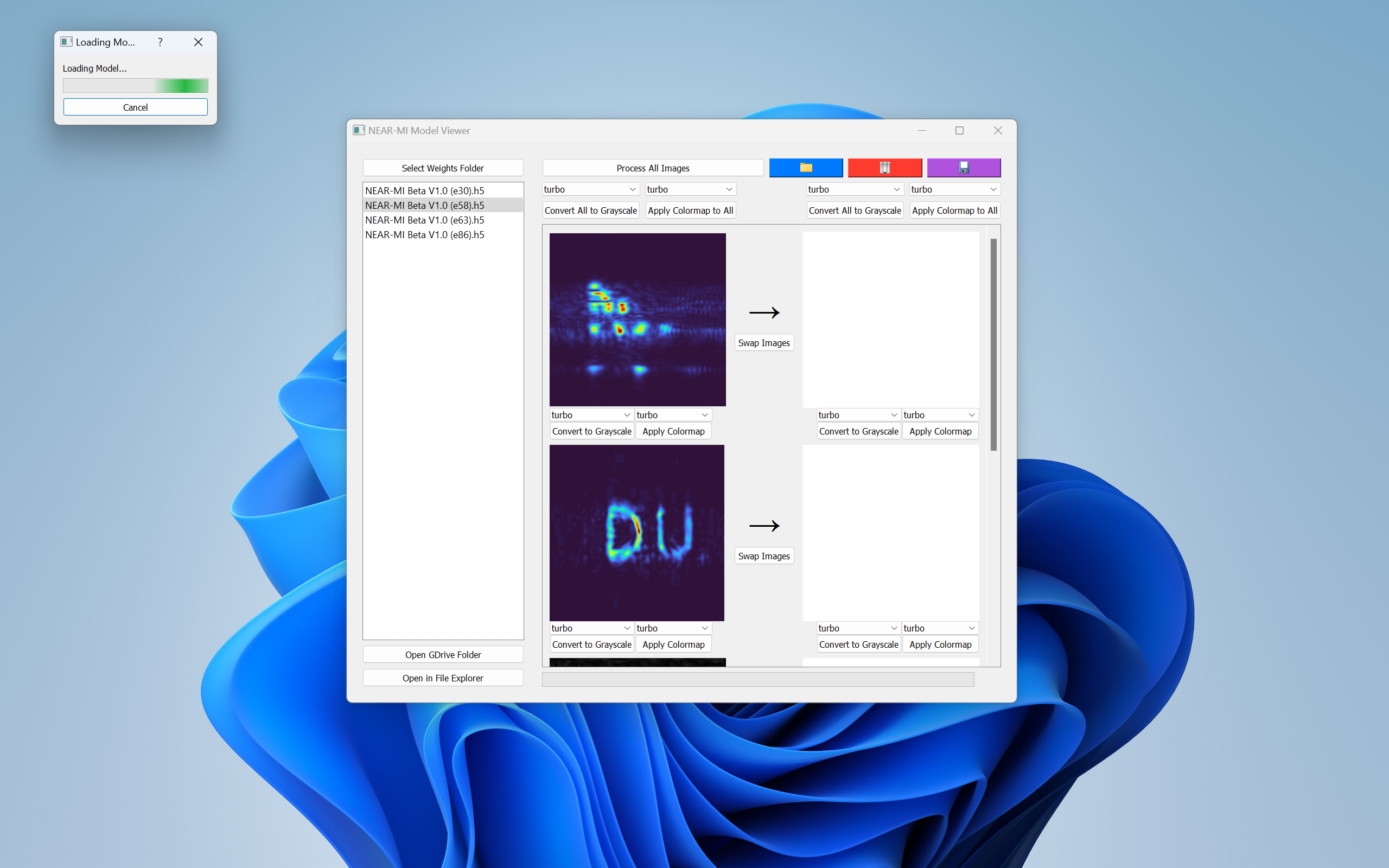

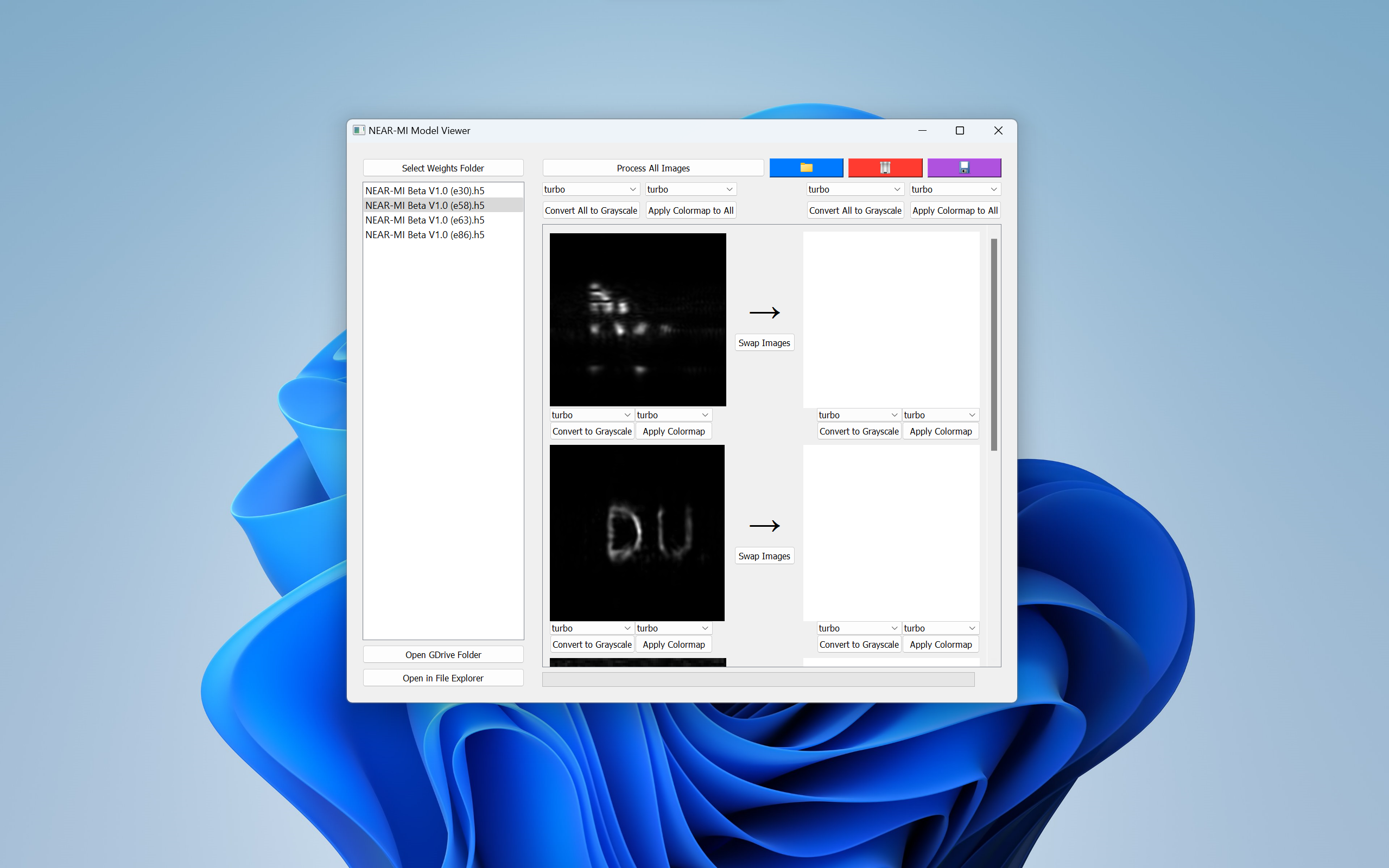

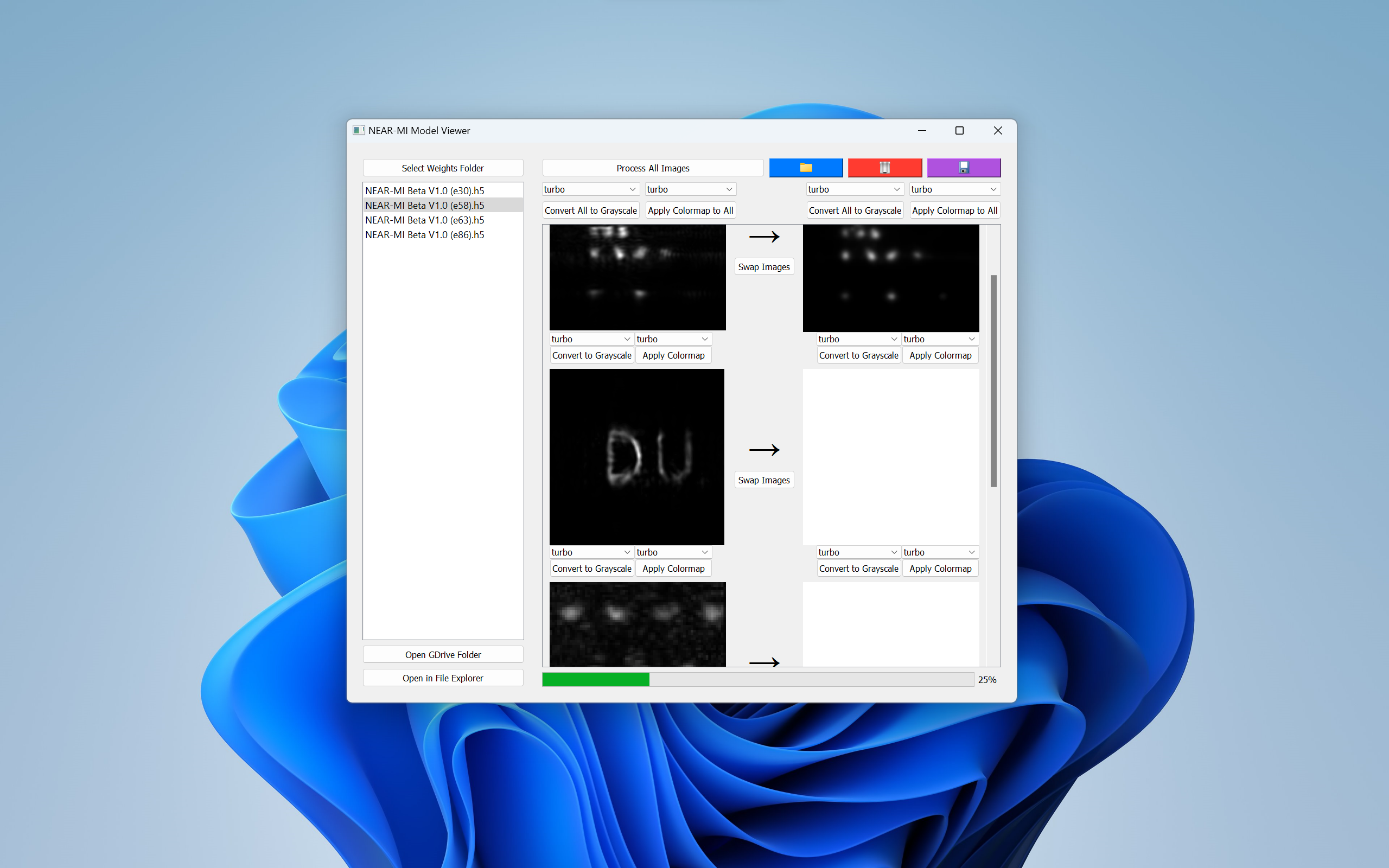

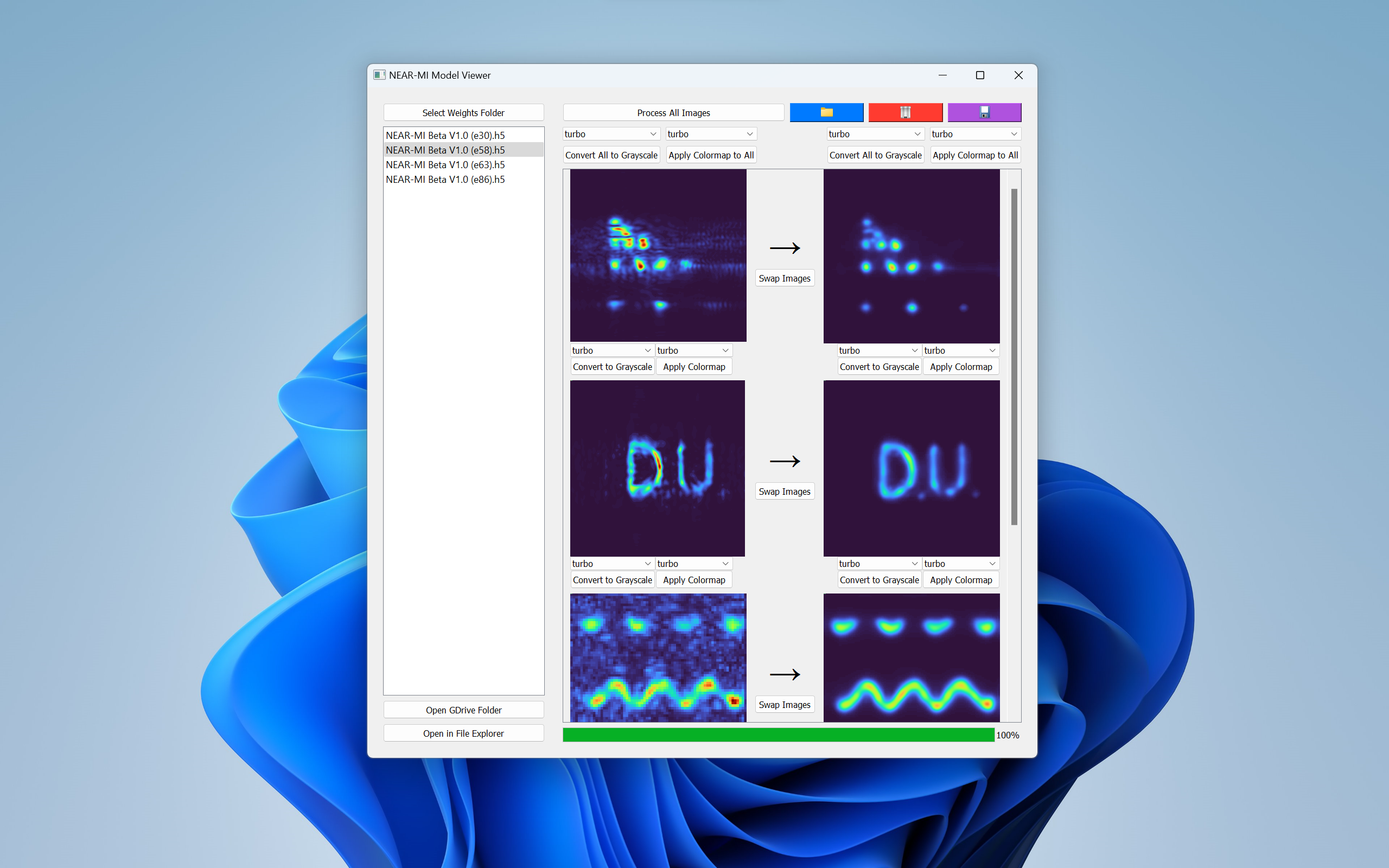

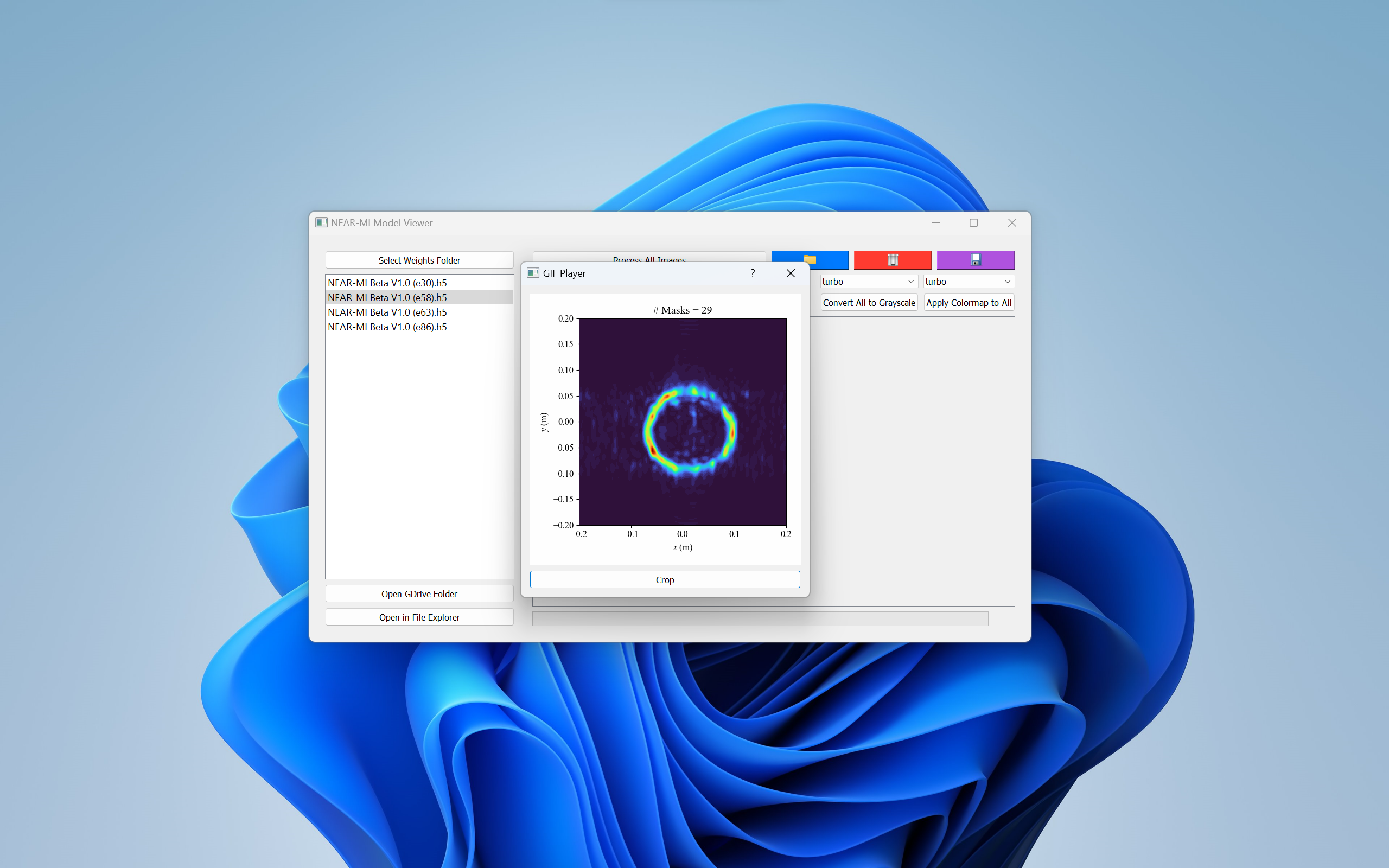

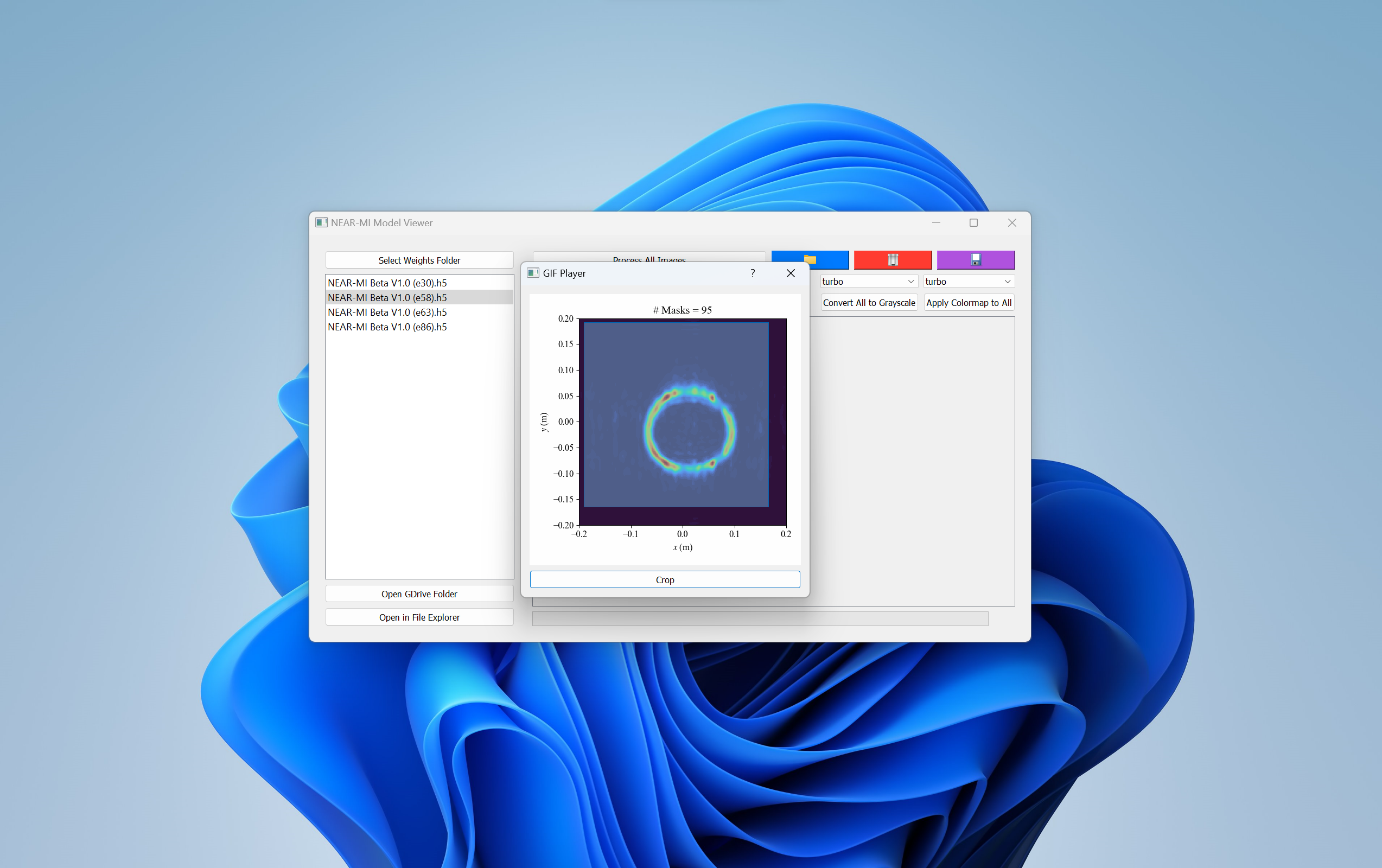

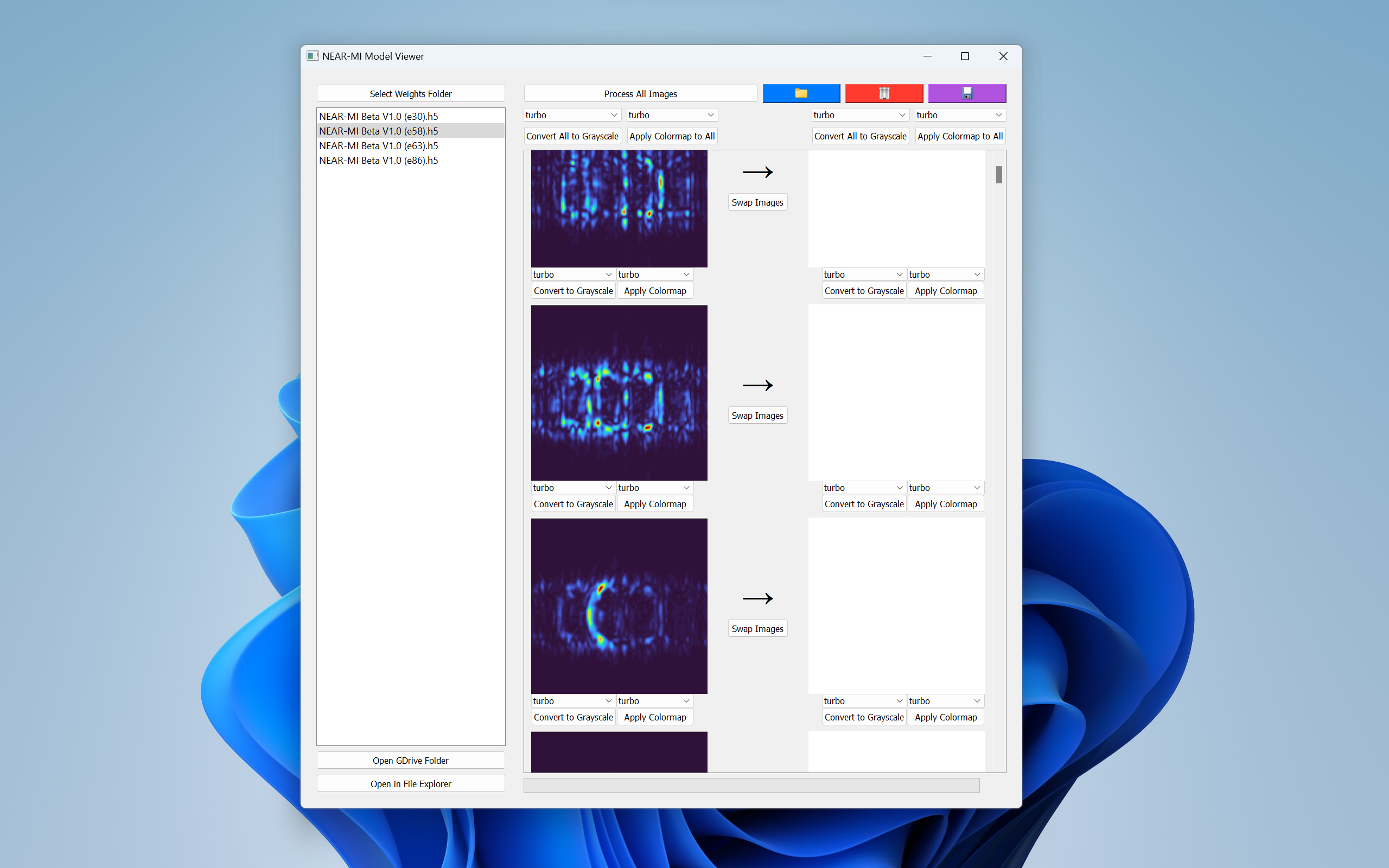

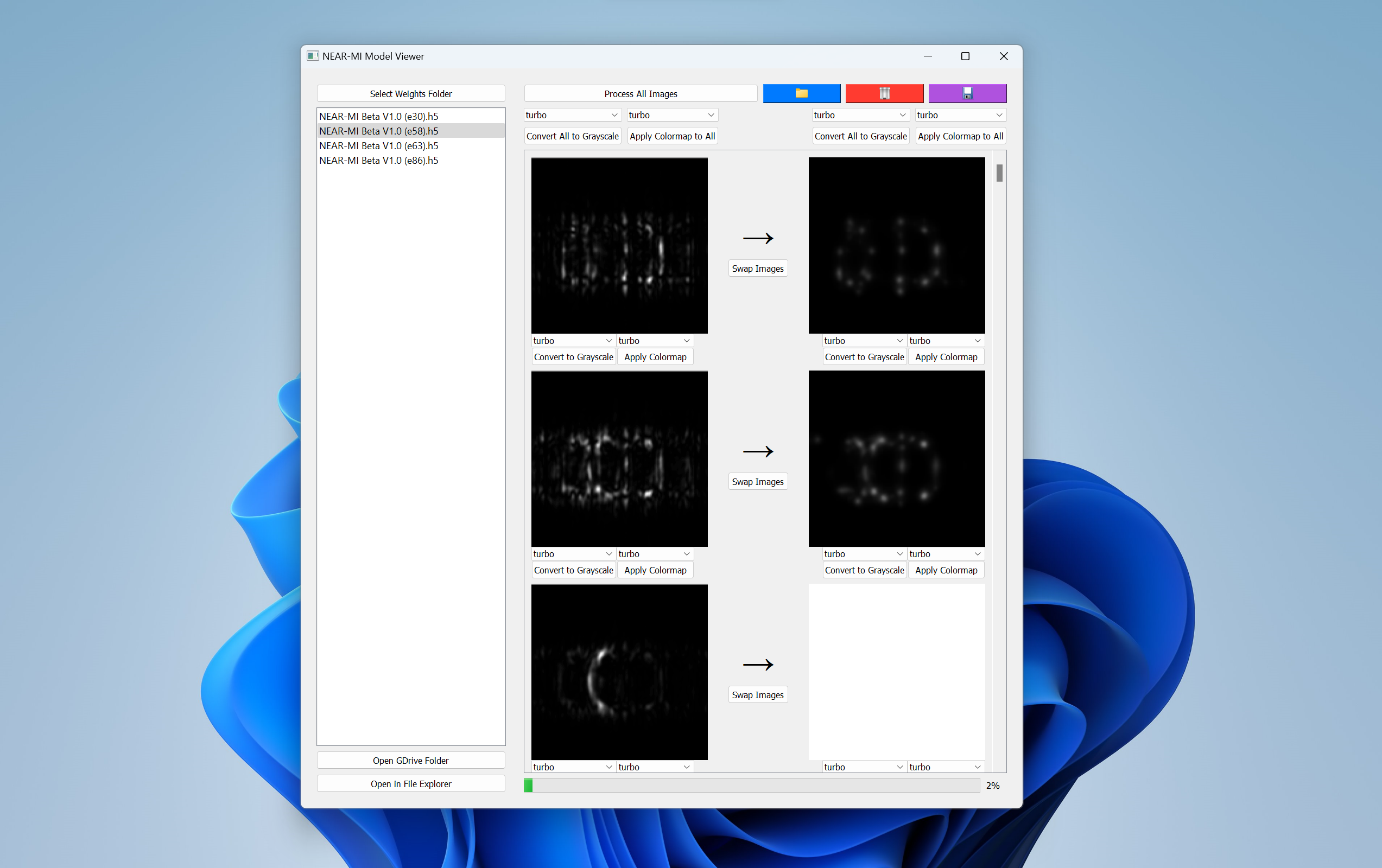

Built during my Duke research because I got tired of evaluating model iterations through terminal output and loss curves. When you're training neural networks for image reconstruction, quantitative metrics only tell part of the story—you need to actually see what different model versions produce on the same inputs to understand what's improving and what's regressing. I wanted a tool where I could load any saved model weights, point it at a folder of test images, and immediately compare outputs across different checkpoints without writing throwaway scripts every time. Built a PyQt desktop application that loads Keras models from h5 files with support for custom layers and objects, processes images in bulk through any loaded model, and lets you toggle colormaps and inversion to inspect reconstruction quality. The key feature for my workflow was GIF generation: the through-wall imaging scans I worked with had temporal progression, so I built export functionality that stitches processed frames into animated GIFs showing how the model reconstructs a target as the antenna moves across it. Supported multiple input formats including raw images, CSV, pickle, and text files, with batch export as composite images, individual files, or animated slideshows. Became the standard tool our team used for visual model comparison during the NEAR architecture development.

During my Duke research I needed the PAA5100JE optical flow sensor to precisely coordinate metasurface antenna positioning, but the only driver library existed in C and our entire research workflow was in Python. There was no Python package for it, so I built one and open-sourced it. The package uses PySerial to establish serial communication between Python and an Arduino microcontroller running Bitcraze's C driver, translating the sensor's raw X and Y movement counts into Python-readable data over a serial bridge. Wrote the Arduino sketch to handle sensor initialization and continuous data transmission, and the Python side handles automatic serial port detection and data parsing to deliver real-time optical flow readings. Packaged the whole thing as a drop-in tool so anyone working with PAA5100JE or PMW3901 sensors in a Python environment can skip the C interop problem entirely.
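The Python half of a serial bridge like this mostly comes down to reading text lines and turning them into numbers. The sketch below assumes the Arduino prints one `dx,dy` pair per line. That wire format, the function names, and the 9600 baud default are illustrative guesses and are not the published package's actual protocol or API. The pySerial calls used (`serial.Serial(...)` and `readline()`) are part of that library, and the import is kept inside the function so the parsing code runs without hardware.

```python
def parse_motion_line(line):
    """Parse one 'dx,dy' line into a pair of ints; return None if malformed."""
    if isinstance(line, bytes):
        line = line.decode("ascii", errors="ignore")
    parts = line.strip().split(",")
    if len(parts) != 2:
        return None
    try:
        return int(parts[0]), int(parts[1])
    except ValueError:
        return None


def accumulate(lines):
    """Sum per-frame motion counts into a running (x, y) position in counts."""
    x = y = 0
    for line in lines:
        delta = parse_motion_line(line)
        if delta is None:
            continue  # skip boot messages, partial lines, and noise
        x += delta[0]
        y += delta[1]
    return x, y


def stream_from_port(port, baudrate=9600):
    """Yield parsed (dx, dy) pairs from a serial port (requires pySerial)."""
    import serial  # imported lazily so the parser works without hardware

    with serial.Serial(port, baudrate, timeout=1) as ser:
        while True:
            delta = parse_motion_line(ser.readline())
            if delta is not None:
                yield delta
```

Tolerating malformed lines matters in practice because a microcontroller typically emits partial or garbage output right after the port opens and the board resets.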

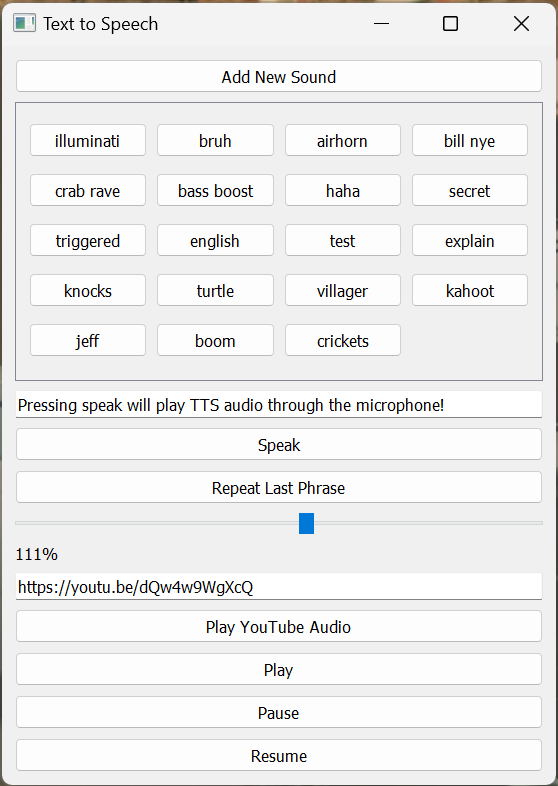

Born out of years of late-night Discord voice calls where I couldn't actually speak without waking up my parents. Instead of typing every message and hoping friends would read it mid-game, I built a PyQt desktop GUI that lets you type messages, converts them to speech via Azure TTS and gTTS, and routes the audio through a VB-CABLE Virtual Audio Device directly into Discord as if you were talking. Friends hear a voice, you never make a sound. Eventually expanded it because it was too fun not to: added a customizable soundboard for dropping audio clips through your mic on demand, and a YouTube player that streams video audio straight into the voice channel. Built the entire interface in PyQt with real-time audio routing between the TTS engine, soundboard, and virtual audio device. The 150 WPM typing speed I developed from years of silent chatting made this project feel long overdue.

Built at 15 as my first major multi-month project before AI coding tools existed, Pointly started from a simple idea: what if parents could turn household chores into a point system where kids actually want to participate? Parents create an account, set up tasks with point values and rewards, post announcements, and invite their children to join as members of the household group. Kids log in, see what needs doing, earn points, and redeem them for rewards their parents define. Built the entire app in Flutter with Dart for cross-platform mobile deployment, using Firebase for real-time database syncing and authentication so changes from any family member show up instantly across all devices. Structured the codebase around MVC patterns to keep things maintainable as features grew, with Cloud Firestore handling the role-based data model separating parent and child permissions. Deployed to Google Play using Gradle for builds and Fastlane for CI/CD automation.

One of my earliest mobile app projects, a food donation platform designed to connect people with surplus food to those who need it nearby. This was a very early project so I don't have much documentation besides a few clips, but the app had real-time location tracking, in-app messaging, and push notifications all working together. Built in Flutter for cross-platform deployment, with Google Cloud Platform's Firestore handling scalable data storage and Firebase Authentication managing user accounts. The core feature was a geolocation matching system using Google Maps API to pair donors with nearby recipients based on proximity, so leftover food from a restaurant or household could find someone who needed it within the area. Integrated RESTful APIs for data flow between the app and backend services, and designed the UI around making the donation process as frictionless as possible for both sides.

Built in 2022 when TensorFlow Lite was well-established on native Android and iOS, but the Flutter side was a different story. The tflite_flutter plugin was only a couple years old, documentation was sparse, and there were very few examples of actually getting on-device ML inference working end-to-end inside a Flutter app. This ended up being a series of projects figuring out that full pipeline from scratch. Built three separate apps: a fruit and vegetable identifier, a pet breed classifier, and a handwriting recognition tool. For each one, I collected and curated a small dataset of around 700 images, set up preprocessing pipelines with data augmentation and feature scaling to stretch what limited training data I had, trained CNN models in TensorFlow, then converted and quantized them down to TF Lite format to actually run on a phone without killing performance or memory. The real challenge was getting the inference bridge working between the TF Lite runtime and Flutter's Dart layer, handling camera input, model loading, and prediction output all within mobile resource constraints.

Education

- Graduated at Age 18 - Featured

- Co-founder and Tech Lead at the App Dev Club

- Chief Executive Officer of AI/ML Club

- Secretary of Cloud Computing Club

- Bitcamp 2023 and 2024 Workshop Presenter and Mentor

- Member of IEEE @ UMD

- Member of Google Developer Student Club

- Member of NeuroTech @ UMD

- Member of XR Club @ UMD

- Member of Philosophy Club @ UMD

- Co-founder and Vice President of the Gaussian Club of Mathematics

- 2nd place Student AMATYC Math League

- Full ride Promise Scholarship and Club Leader Award recipient

- STEM Week presenter

- Member of Phi Theta Kappa Honor Society

- Member of Student Research Club

- Member of Diverse Male Student Initiative

Relevant Coursework

Get in Touch

Have an opportunity, a question, or just want to connect? I'd love to hear from you.